By Andy Ruth, Notable Architect

(Part 1 appeared Tuesday here.)

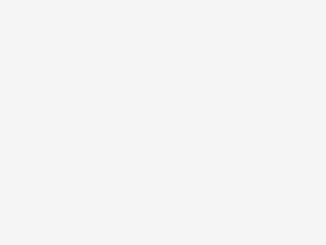

As you model a Zero Trust initiative into workstreams, a logical separation is between access control and asset protection. Access control focuses on the request, that is, on identity, device, and network validations. This is like a peephole and a lock on the front door. Asset protection focuses on fulfilling the request through the server/service, app, and data environment. This is like locking up the silverware and valuables in the house. The policy engine and policy enforcement points provide the automation and the control points for enforcing both access control and asset protection. This is like adding security cameras inside and outside the house. This article focuses on modeling the asset protection controls of the environment.

Modeling Asset Protection Workstreams

A Zero Trust strategy describes the transformation from a network-centric, implicit trust model to a data-centric, explicit trust model that is ready for the Internet and the digital economy. It requires all IT assets to be cataloged with a defined business value. The goal is to design access control and asset protection so that automated rules enforcement can be defined and implemented. The asset catalog, with its business value data, enables operations to prioritize detection, response, and recovery efforts.

Modeling a Data Workstream

The goal when modeling the data environment for a Zero Trust initiative is to have the information available to decide what data should be available, when, where, and to whom.

That requires you to know what data you have, its value to the business, and the level of risk if it is lost. This information feeds an automated rules engine that enforces governance based on the state of the data request journey. The purpose is not to define or modify a data model.

Hopefully, you already have this information cataloged. From a digital asset perspective, most companies think of their data as their crown jewels, so the data pillar might be the most important pillar.

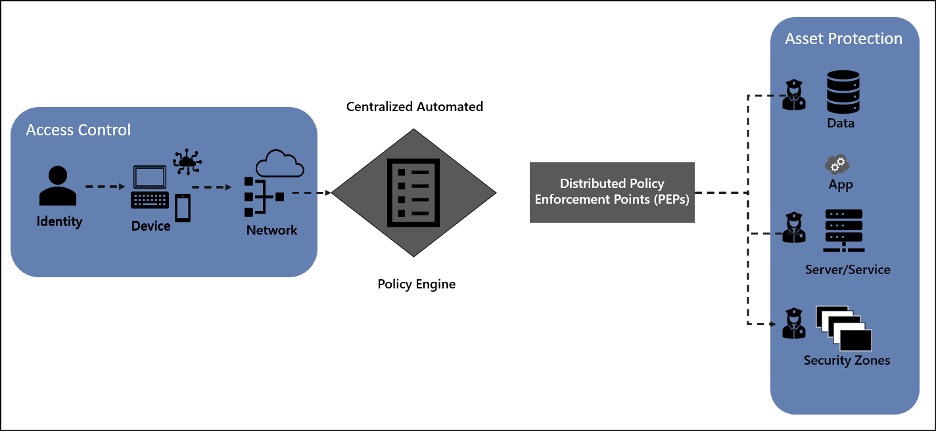

One challenge with data is that applications are what provide access to it. Many applications are not written to support modern authentication mechanisms and don't handle the protocols needed to integrate with contemporary data environments, so they might not support a Zero Trust data model. Hopefully, you're already experimenting with current mechanisms in your microservice environment. If not, as with any elephant, you eat it one bite at a time. An approach that might make modeling easier is to start with this mental model:

The goal of a Zero Trust strategy is to get the right data to the right identity (and only the right identity) by enforcing the governance model. Access to the data should always go through an application, which acts as the gatekeeper. The device, network, and server/service states act as constraints on what data is accessible. Some keys to modeling this workstream:

- Put principles in place that align your data access goals with your Zero Trust goals.

- Specifically, make sure there is a principle that access to data sources must be through a programmatic interface and that direct connections are cause for immediate termination.

- Make sure there is another principle that states access control requires two credentials, such as a JWT (JSON Web Token) for user context and API (Application Programming Interface) authentication via an SPN (service principal name).

- Make sure your data classification strategy supports your Zero Trust objectives.

- Verify data models are complete and up to date.

- Decide what level of automation to put in place to control unstructured (tacit) data.
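To make the first two principles testable, they can be expressed as a rule that a policy enforcement point applies before a request ever reaches a data source. The following is a minimal sketch, assuming a hypothetical `DataRequest` shape; it only checks that both credentials are present and does not validate them (signature, expiry, and audience checks would still belong to the policy engine).

```python
from dataclasses import dataclass
from typing import Optional


# Hypothetical shape of an inbound data request; field names are illustrative only.
@dataclass(frozen=True)
class DataRequest:
    via_api: bool                     # True if the request came through a programmatic interface
    user_token: Optional[str]         # user-context credential, e.g. a JWT
    service_principal: Optional[str]  # calling service's identity, e.g. an SPN


def evaluate(request: DataRequest) -> str:
    """Apply the two principles: API-only access and two credentials."""
    if not request.via_api:
        # Principle: direct connections to data sources are not allowed.
        return "terminate"
    if not request.user_token or not request.service_principal:
        # Principle: both user context and service identity are required.
        return "deny"
    # Both credentials are present; validating them is left to the policy engine.
    return "forward-to-policy-engine"


print(evaluate(DataRequest(via_api=False, user_token="t", service_principal="svc")))
print(evaluate(DataRequest(via_api=True, user_token=None, service_principal="svc")))
print(evaluate(DataRequest(via_api=True, user_token="t", service_principal="svc")))
```

Returning a decision string rather than raising keeps the rule easy to log and audit, which matters when the same rule is enforced automatically across many services.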

Two potential goals for your modeling exercise could be to:

- define an experiment to create an application development pattern that uses current authentication methods. A common framework is OAuth 2.0, defined in RFC 6749. Marcus Nilsson has an interesting article that provides some basic insights.

- catalog all data assets in a manner that captures their business value, both the data's value to business operations and the potential risk if it is lost. The catalog is used to create governance that is enforced automatically.
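A catalog entry that records both dimensions of business value can be ranked directly, which is what lets operations prioritize detection, response, and recovery. This is a minimal sketch, assuming hypothetical 1-5 scales and a simple product score; your organization would agree its own scales and weighting.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class CatalogEntry:
    name: str
    business_value: int  # hypothetical 1-5 scale: value to business operations
    loss_risk: int       # hypothetical 1-5 scale: impact if lost or exposed

    @property
    def priority(self) -> int:
        # Simple product score; an assumption, not a standard formula.
        return self.business_value * self.loss_risk


def prioritize(entries: List[CatalogEntry]) -> List[CatalogEntry]:
    """Order assets so operations can focus detection, response, and recovery."""
    return sorted(entries, key=lambda e: e.priority, reverse=True)


catalog = [
    CatalogEntry("public-price-list", business_value=2, loss_risk=1),
    CatalogEntry("customer-records", business_value=5, loss_risk=5),
    CatalogEntry("internal-wiki", business_value=3, loss_risk=2),
]
print([e.name for e in prioritize(catalog)])
# → ['customer-records', 'internal-wiki', 'public-price-list']
```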

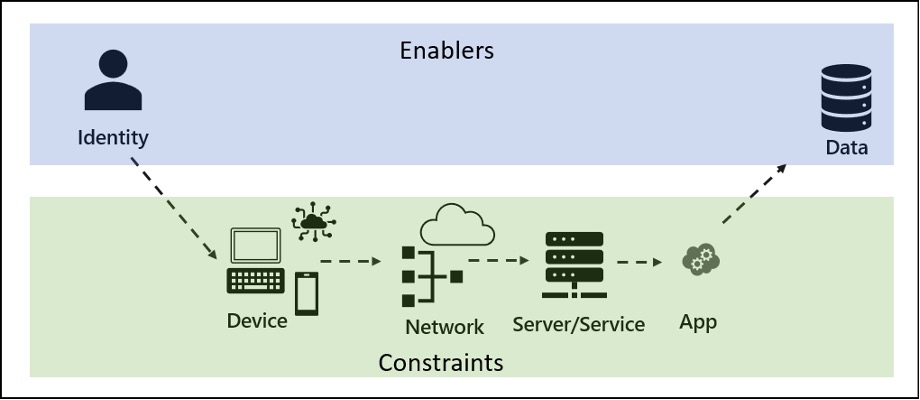

For the experiment, we recommend that a direct report of the chief data architect work with a development team to run it. The approach might be to create a microservice design where authentication can be outsourced to multiple external identity providers without coupling each microservice to each of those providers. The goal of the experiment might be to validate that the principle is worded correctly, that your pattern works, and that the impact on feature release velocity isn't excessive. By calling it an experiment, you'll reduce friction with the development team, because they run experiments all the time and you won't be seen as coming in and telling them what to do. This approach should also help build rapport with the development teams as partners.
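The decoupling described above can be sketched as a single gateway that owns all identity-provider integration and hands every microservice the same internal identity type. This is a minimal illustration, not Marcus Nilsson's design or any product's API: `AuthGateway`, `Principal`, the issuer names, and the lambda validators are all hypothetical stand-ins, and real validators would verify token signature, expiry, and audience.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Mapping, Tuple


@dataclass(frozen=True)
class Principal:
    """Internal identity every microservice receives, whichever IdP issued the token."""
    subject: str
    issuer: str
    roles: Tuple[str, ...]


# A validator checks a token from one external identity provider and returns its claims.
# The validators registered below are stand-ins; they do no real verification.
Validator = Callable[[str], Mapping[str, Any]]


class AuthGateway:
    """Hypothetical edge component that owns all identity-provider integration."""

    def __init__(self) -> None:
        self._validators: Dict[str, Validator] = {}

    def register(self, issuer: str, validator: Validator) -> None:
        # Adding or swapping an IdP changes only the gateway, not the microservices.
        self._validators[issuer] = validator

    def authenticate(self, issuer: str, token: str) -> Principal:
        validator = self._validators.get(issuer)
        if validator is None:
            raise PermissionError(f"untrusted issuer: {issuer}")
        claims = validator(token)
        return Principal(
            subject=str(claims["sub"]),
            issuer=issuer,
            roles=tuple(claims.get("roles", ())),
        )


def orders_service(principal: Principal) -> str:
    # Business logic depends only on Principal, never on an IdP SDK.
    return f"orders for {principal.subject}"


gateway = AuthGateway()
gateway.register("partner-idp", lambda token: {"sub": "alice", "roles": ["buyer"]})
gateway.register("workforce-idp", lambda token: {"sub": "bob"})
print(orders_service(gateway.authenticate("partner-idp", "opaque-token")))  # orders for alice
```

The design choice being tested is that onboarding a new identity provider is one `register` call at the edge, so feature teams keep shipping against `Principal` without new integration work.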

You also need leadership endorsement. Setting the goals as validating the approach and verifying that feature delivery velocity isn't impacted might be better received than a goal of being more secure. As soon as you ask leadership for a team to do anything, leadership starts thinking that three developers cost $1 million (fully burdened) and that teams are typically about six people, so you're asking for $2 million a year (the cost models they think in might be higher, given inflation and changes in the workforce over recent years). They also lose six people who would otherwise be delivering new features. If you can limit the experiment to 90 days (one quarter), they'll consider it a $500,000 experiment. By stating that the goal is to validate the approach and minimize the impact on velocity (rather than promising features or saying it'll be more secure), you make leadership more likely to endorse you, especially if you can say the experiment will be on a set of features that are already planned. Then you are simply doing the same thing in a different way, and leadership still gets its features. Setting it to 90 days also makes them less likely to doubt you can accomplish the goal. Longer might cost too much, and shorter might raise doubt that you can do what you say in that time. And the business rhythm is quarter, half, and annual, so anything shorter than a quarter is irrelevant to getting the endorsement.
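The leadership arithmetic above can be made explicit. This small sketch uses only the rule of thumb from the text (three developers cost about $1 million a year, fully burdened); the function name is illustrative, and `Fraction` keeps the division exact.

```python
from fractions import Fraction

# Rule of thumb from the text: three developers cost about $1 million per year, fully burdened.
COST_PER_THREE_DEVS_PER_YEAR = 1_000_000


def experiment_cost(team_size: int, quarters: int) -> Fraction:
    """Estimated fully burdened cost of a team for a number of quarters."""
    annual = Fraction(team_size * COST_PER_THREE_DEVS_PER_YEAR, 3)
    return annual * Fraction(quarters, 4)


print(experiment_cost(team_size=6, quarters=4))  # a full year → 2000000
print(experiment_cost(team_size=6, quarters=1))  # one 90-day quarter → 500000
```

If your leadership's mental cost model is higher, as the text notes it might be, only the constant changes.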

For your experiment, you might start with the designs that Marcus Nilsson uses, or with one that specifically mirrors the internal and external separation of authentication and function and limits the integration requirements for every microservice.

With your experiment, you want to make sure there is a high probability of success, that the reference implementation can be reused by all the development teams with limited training, and, most importantly, that it provides Zero Trust alignment without negative business impact. The experiment also starts growing internal competency and provides the insight needed to create training for all developers.

For the data catalog effort, start by assessing how mature your data environment is and how well defined and documented the data structure is. Since separate groups are likely to handle the structured and unstructured data, you can likely run concurrent efforts to increase velocity toward your Zero Trust goals. We recommend that you model the structured and unstructured data separately and, if you can only model one at a time, that you model the structured data first. Hopefully, you already have a list of authoritative data sources, entities, and objects, so this will go quickly. If not, there is no better time than now to collect that data.

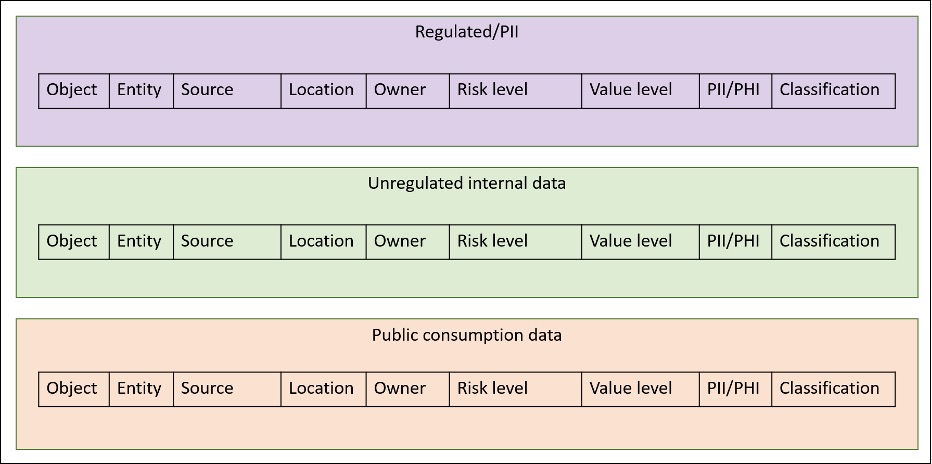

To start your structured data model, consider making three boxes:

- Regulated/PII data

- Unregulated internal data

- Public consumption data

This effort is primarily meant to find data objects and entities, and their sources. If possible, we recommend that you categorize your data by object and capture the entity and authoritative database as metadata. If you lack metadata for any data source, this exercise is a wonderful opportunity to collect it. Your starting point may look like this:

Organizing data by object will potentially take longer, but it supplies the level of detail needed to enforce more granular control, such as is available when using attribute-based access control (ABAC) rather than role-based access control (RBAC). The choice between ABAC and RBAC is an architectural decision that might differ from solution to solution, so having the data collected gives you more flexibility in solution design. What data you collect and how you organize it will, of course, be specific to your environment, but this diagram should give you a starting point for setting up your collection process.
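To see why object-level detail matters, compare an RBAC rule that can only look at which of the three boxes an object is in with an ABAC rule that also uses per-object metadata. This is a minimal sketch under stated assumptions: `owning_department` is a hypothetical attribute not named in the text, and the role names and rules are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataObject:
    name: str
    box: str                # "regulated", "internal", or "public" (the three boxes)
    entity: str             # data entity, captured as metadata
    source: str             # authoritative database, captured as metadata
    owning_department: str  # hypothetical extra attribute used by the ABAC rule


@dataclass(frozen=True)
class User:
    role: str
    department: str


# RBAC: a role maps to the boxes it may read; object detail beyond the box is ignored.
RBAC_POLICY = {
    "analyst": {"internal", "public"},
    "privacy-officer": {"regulated", "internal", "public"},
}


def rbac_allows(user: User, obj: DataObject) -> bool:
    return obj.box in RBAC_POLICY.get(user.role, set())


def abac_allows(user: User, obj: DataObject) -> bool:
    # ABAC: combine user attributes with per-object metadata.
    if obj.box == "public":
        return True
    same_department = user.department == obj.owning_department
    if obj.box == "internal":
        return same_department
    # Regulated/PII data: privacy officers in the owning department only.
    return user.role == "privacy-officer" and same_department


roadmap = DataObject("roadmap", "internal", "Product", "plm_db", "product")
salaries = DataObject("salaries", "regulated", "Employee", "hr_db", "hr")
analyst = User(role="analyst", department="finance")

print(rbac_allows(analyst, roadmap), abac_allows(analyst, roadmap))  # True False
```

The RBAC rule grants the finance analyst every internal object, while the ABAC rule narrows that to the analyst's own department; the narrower rule is only possible because the catalog recorded metadata per object.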

The tacit or unstructured data is likely managed by the operations and security teams, and the data scientists and data architects might not have much insight into it. If there are silos in your environment that separate the teams responsible for structured and unstructured data, we recommend you use these exercises to build rapport with both teams and establish a communication bridge between the two groups.

For your tacit or unstructured data, your goal is still to ensure that the right data is available to the right identity when and where needed, so long as the request falls within the governance boundaries for tacit information. We recommend using a comprehensive suite of tools for unstructured data if your organization has concerns around controlling that data.
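Following the mental model earlier in this section, governance sets what an identity may see, and device and network state can only narrow it. The sketch below shows one way to express that boundary, assuming the starter classification levels suggested in the metadata list; the specific caps for an untrusted network or a non-compliant device are hypothetical policy choices.

```python
from dataclasses import dataclass
from enum import IntEnum


class Classification(IntEnum):
    # Ordered from least to most sensitive.
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    HIGHLY_SENSITIVE = 3


@dataclass(frozen=True)
class RequestContext:
    identity: str
    clearance: Classification  # highest level governance grants this identity
    device_compliant: bool
    network_trusted: bool


def effective_ceiling(ctx: RequestContext) -> Classification:
    """Device and network state can only lower the ceiling, never raise it."""
    ceiling = ctx.clearance
    if not ctx.network_trusted:
        ceiling = min(ceiling, Classification.INTERNAL)  # hypothetical cap
    if not ctx.device_compliant:
        ceiling = min(ceiling, Classification.PUBLIC)    # hypothetical cap
    return ceiling


def within_boundary(ctx: RequestContext, item: Classification) -> bool:
    return item <= effective_ceiling(ctx)


ctx = RequestContext("alice", Classification.HIGHLY_SENSITIVE,
                     device_compliant=True, network_trusted=False)
print(within_boundary(ctx, Classification.INTERNAL))      # True
print(within_boundary(ctx, Classification.CONFIDENTIAL))  # False
```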

You can take two approaches to start your modeling:

- You can choose a tool suite and use the features and options available in the tools to reverse engineer a model of your organization.

- You can build your model from scratch.

For example, if you use the Microsoft productivity stack, Microsoft Purview and Microsoft Priva (either the tools or their documentation) can guide your modeling. The tools have quick starts that should show which objects are likely to be most relevant in your model. The documentation is free and easy to skim, so you can also use that information to build your model.

If you prefer to build your own model from scratch, we recommend you start by building a table of the common types of data and adding a list of metadata relevant to your organization. Once you have built a substantial list, you can organize the data by the productivity tool(s) associated with it. For the common types of tacit data, consider using this list as a starter set:

- Emails

- Social media posts

- Word documents

- PDF files

- PowerPoint presentations

- Audio files

- Video files

- Images

- Website pages

The metadata that might be relevant includes:

- Key Identifier – This could be a unique filename or ID, URL, or other unique identifier you discover as you collect information.

- Source – Where the data originated, such as the author of a document, the sender of an email, or the creator of a video.

- Creation Date – When the data object was created. This can help you find the most recent version of a document and help with harvesting intellectual property (IP).

- Last Modified Date – The most recent date the data object was altered.

- Location – Where the data is stored, which could be a file path, a database identifier, or a URL.

- Format/Type – The format of the data, such as PDF, MP3, MP4, DOCX, JPEG, etc.

- Size – The size of the data object, typically in kilobytes (KB), megabytes (MB), or gigabytes (GB).

- Access Permissions – Who can view, edit, or delete the data.

- Associated Project/Department – Information about which project or department the data is associated with.

- Tags/Keywords – Words or phrases that describe the content of the data object to facilitate search and categorization.

- Classification – Classifications vary across organizations. If you don't have a data classification in place, consider starting with public, internal, confidential, and highly sensitive.

- Version – If the data object has multiple versions, keep track of the version number.
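If you build the model from scratch, the metadata list above maps naturally onto one record type, and grouping records by format gives a first cut at organizing them by productivity tool. This is a minimal sketch: the field names mirror the list, while `FORMAT_TO_TOOL`, the generic tool labels, and the sample records are hypothetical placeholders for your own inventory.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from datetime import date
from typing import Dict, List, Optional, Set


@dataclass
class TacitDataRecord:
    key_identifier: str   # unique filename, ID, or URL
    source: str           # author, sender, or creator
    creation_date: date
    last_modified_date: date
    location: str         # file path, database identifier, or URL
    format_type: str      # e.g. "PDF", "MP3", "MP4", "DOCX", "JPEG"
    size_kb: int
    classification: str   # e.g. public, internal, confidential, highly sensitive
    access_permissions: Dict[str, Set[str]] = field(default_factory=dict)  # who -> {"view", "edit", "delete"}
    project_or_department: Optional[str] = None
    tags: List[str] = field(default_factory=list)
    version: Optional[str] = None


# Hypothetical format-to-tool mapping; replace with the tools your organization uses.
FORMAT_TO_TOOL = {
    "DOCX": "word processor",
    "PPTX": "presentation software",
    "PDF": "document viewer",
    "MP3": "media platform",
    "MP4": "media platform",
    "JPEG": "image library",
}


def group_by_tool(records: List[TacitDataRecord]) -> Dict[str, List[str]]:
    """Organize cataloged items by the productivity tool associated with them."""
    grouped: Dict[str, List[str]] = defaultdict(list)
    for record in records:
        tool = FORMAT_TO_TOOL.get(record.format_type.upper(), "unassigned")
        grouped[tool].append(record.key_identifier)
    return dict(grouped)


# Hypothetical sample records for illustration.
records = [
    TacitDataRecord("q3-plan.docx", "j.doe", date(2023, 7, 1), date(2023, 7, 9),
                    "/shares/strategy", "DOCX", 48, "confidential"),
    TacitDataRecord("town-hall.mp4", "comms", date(2023, 6, 2), date(2023, 6, 2),
                    "https://intranet.example/video/42", "MP4", 512000, "internal"),
    TacitDataRecord("notes.txt", "a.lee", date(2023, 5, 5), date(2023, 5, 6),
                    "/home/alee", "TXT", 3, "internal"),
]
print(group_by_tool(records))
# → {'word processor': ['q3-plan.docx'], 'media platform': ['town-hall.mp4'], 'unassigned': ['notes.txt']}
```

The "unassigned" bucket is deliberate: items that match no known tool are exactly the gaps the collection effort should surface.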

As you model unstructured data, you must start with a clear idea of what your goals are. Just by looking at the types of data and the metadata, you can see that the information might supply value as IP, risk exposing company confidential data, violate regulatory and compliance requirements, or create insider risk.

The goals you set for unstructured data will help define what you need to collect. However, if collecting additional data doesn't add to the time or cost of the collection effort, that information might be useful in later phases of your Zero Trust initiative.

With the data pillar, it is easy to forget that the goal is to catalog the data in a way that lets you write access governance for it. Use whatever approach you need to remind participants that you are not defining a data model; you are collecting metadata for data types and sources to define governance that can be automated.