By Walson Lee

Abstract

Part I of this series on AI Ethics focused on the “What” portion of AI Ethics, proposing a set of recommended AI Ethics Guiding Principles. The primary objective of this companion technical article is to ensure responsible and ethical AI deployment by outlining high-level architectural and design recommendations for addressing those AI Ethics guiding principles.

This article outlines a list of recommended architectural and design patterns, practices, and models to help architects and builders of AI solutions meet AI Ethics challenges:

· The Ethics by Design model proposed by the EU,

· Applying the Ethics by Design model for Generative AI (GenAI) based solutions,

· Recommended architectural patterns based on both multi-agent collaboration and Retrieval-Augmented Generation (RAG) patterns, and

· Additional architectural patterns and practices for end-to-end processes.

Summary of AI Ethics Guiding Principles

The following table summarizes the three recommended AI Ethics Guiding Principles for enterprise AI solutions, which are based on recent AI ethics research and a reframing of Asimov’s three laws of robotics.

| Isaac Asimov’s three laws of robotics | Recommended AI Ethics Guiding Principles |
| --- | --- |
| 1. A robot may not injure a human being or, through inaction, allow a human being to come to harm. | The Human-First Maxim: AI-based solutions shall not produce content or actions that are detrimental or harmful to humans (e.g., customers, employees, and other stakeholders) and/or society at large. |
| 2. A robot must obey the orders given it by human beings except where such orders would conflict with the First Law. | The Ethical Imperative: AI solutions shall adhere to the ethical edicts outlined by government laws and enterprise ethical governing bodies, as well as its architects and curators, barring situations in which such edicts are at odds with the Human-First Maxim. |
| 3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws. | The Responsible Mandate: AI solutions should actively resist the propagation or magnification of biases, prejudices, and discrimination. They shall endeavor to discern, rectify, and mitigate such tendencies within their output. They shall maintain their integrity and protect themselves. |

AI Ethics by Design Model

Summary of the Model

The “AI Ethics by Design” model proposed by the EU is a comprehensive framework aimed at integrating ethical principles into the development and deployment of AI systems. Here’s a summary tailored for enterprise decision-makers and technical architects:

Core Principles:

- Respect for Human Agency: Ensuring AI systems support human decision-making and autonomy.

- Privacy and Data Governance: Protecting personal data and ensuring transparent data management practices.

- Fairness: Mitigating biases and ensuring equitable outcomes for all users.

- Well-being: Promoting individual, social, and environmental well-being.

- Transparency: Making AI operations understandable and accessible to users.

- Accountability: Establishing clear responsibility and oversight mechanisms.

Practical Steps:

- Ethical Impact Assessments: Conducting assessments to identify and mitigate potential ethical risks.

- Bias Mitigation Techniques: Implementing techniques to detect and reduce biases in AI models.

- User-Centric Design: Designing AI systems with the end-user in mind, ensuring usability and accessibility.

- Regulatory Compliance: Adhering to relevant regulations and standards to ensure legal and ethical compliance.

By adopting the AI Ethics by Design model, organizations can build trustworthy AI systems that align with ethical standards and foster public trust.

Implementing AI Ethics by Design Model

Implementing the “AI Ethics by Design” model involves several practical steps that organizations can take to ensure their AI systems are ethically sound. Here are some key recommendations based on the article A Practical Guide to Building Ethical AI by Reid Blackman (Harvard Business Review, October 15, 2020) and on recent AI ethics research:

- Establish an Ethical Framework:

  - Identify Existing Infrastructure: Leverage existing infrastructure that can support a data and AI ethics program.

  - Create a Tailored Ethical Risk Framework: Develop a framework that addresses the specific ethical risks relevant to your industry.

- Integrate Ethics into the AI Lifecycle:

  - Early Integration: Incorporate ethical considerations from the initial design phase through to deployment.

  - Continuous Monitoring: Regularly evaluate ethical compliance throughout the AI lifecycle.

- Foster Organizational Awareness:

  - Build Awareness: Educate employees about the importance of AI ethics and their role in maintaining ethical standards.

  - Incentivize Ethical Behavior: Formally and informally incentivize employees to identify and address AI ethical risks.

- Engage Stakeholders:

  - Stakeholder Engagement: Involve stakeholders, including users, in the development process to understand their concerns and expectations.

  - Transparency and Documentation: Maintain detailed documentation of AI systems to ensure transparency and accountability.

- Implement Bias Mitigation Techniques:

  - Bias Detection and Reduction: Use techniques to detect and mitigate biases in AI models.

  - User-Centric Design: Design AI systems with the end-user in mind, ensuring usability and accessibility.

- Regulatory Compliance:

  - Adhere to Regulations: Ensure compliance with relevant regulations and standards to maintain legal and ethical integrity.

- Addressing Unique Challenges for GenAI-based solutions:

  - Manipulation Risks: GenAI can enable automated, effective manipulation at scale. It is crucial to design GenAI applications to avoid illegitimate forms of manipulation.

  - Transparency in Complex Models: Ensuring transparency in GenAI operations can be challenging, especially with complex models. Efforts should be made to explain how outputs are generated.

  - Data Privacy: Protecting personal data used in training GenAI models is essential to maintain user trust.

- Monitor and Evaluate:

  - Impact Assessments: Conduct ethical impact assessments to identify and mitigate potential ethical risks.

  - Engage Stakeholders: Continuously engage with stakeholders to monitor the impacts of AI systems and make necessary adjustments.

By following these steps, organizations can effectively apply the “Ethics by Design” model to GenAI-based solutions, ensuring they are ethically sound and trustworthy.

Addressing AI Ethics Guiding Principles with Architectural and Design Recommendations

The following table outlines the Ethics by Design Model’s implementation recommendations as well as the related architectural and design patterns for Generative AI solutions:

| Recommended AI Ethics Guiding Principles | Implementation Recommendations (EU’s “Ethics by Design” Model) | Architectural and Design Patterns for Generative AI Solutions |
| --- | --- | --- |
| The Human-First Maxim: AI-based solutions shall not produce content or actions that are detrimental or harmful to humans (e.g., customers, employees, and other stakeholders) and/or society at large. | • Conduct regular impact assessments to identify potential harm. • Implement robust monitoring and feedback mechanisms. • Ensure transparency and explainability in AI decision-making processes. | • Implement safety constraints and fail-safes. • Use the Retrieval Augmented Generation (RAG) pattern to ensure accurate and contextually appropriate responses. • Incorporate human-in-the-loop mechanisms for critical decision-making. |
| The Ethical Imperative: AI solutions shall adhere to the ethical edicts outlined by government laws and enterprise ethical governing bodies, as well as its architects and curators, barring situations in which such edicts are at odds with the Human-First Maxim. | • Align AI development with legal and ethical standards. • Establish clear governance frameworks and ethical guidelines. • Regularly update AI systems to comply with evolving regulations. | • Use explainable AI (XAI) techniques to provide transparency. • Develop modular AI components that can be easily updated. • Use fairness-aware machine learning algorithms. |
| The Responsible Mandate: AI solutions should actively resist the propagation or magnification of biases, prejudices, and discrimination. They shall endeavor to discern, rectify, and mitigate such tendencies within their output. They shall maintain their integrity and protect themselves. | • Implement bias detection and mitigation strategies. • Conduct regular audits and reviews of AI systems. • Foster a culture of continuous improvement and ethical awareness. | • Implement role-based access control (RBAC) to ensure compliance. • Implement continuous monitoring and logging. • Develop self-healing systems to maintain integrity. |

Safety Constraints and Fail-Safes

- Description: Implementing safety constraints and fail-safes ensures that AI systems operate within predefined safety boundaries and can handle unexpected situations without causing harm to humans, as required by the Human-First Maxim.

- Details:

- Approaches: Use formal verification methods to mathematically prove that the AI system adheres to safety constraints. Implement redundant systems and backup mechanisms to handle failures gracefully.

- Research Reference: Towards Guaranteed Safe AI: A Framework for Ensuring Robust and Reliable AI Systems proposes a framework for ensuring robust and reliable AI systems through guaranteed safe AI.

- Case Studies: Autonomous vehicles often use fail-safes to ensure they can safely stop or pull over in case of system failures.
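To make the fail-safe idea concrete, the following minimal Python sketch wraps a text generator so that any constraint violation or backend failure degrades to a safe fallback response. It is an illustration under stated assumptions, not a formal verification method: `guarded_generate`, `Constraint`, `SAFE_FALLBACK`, the stub models, and the keyword-based checks are all hypothetical names introduced here, and a production system would likely replace the keyword checks with dedicated safety classifiers.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical fallback text; a real system would define its own safe response.
SAFE_FALLBACK = "I can't help with that request. A human agent will follow up."


@dataclass
class Constraint:
    name: str
    is_satisfied: Callable[[str], bool]  # True when the text passes the check


def guarded_generate(generate: Callable[[str], str], prompt: str,
                     constraints: List[Constraint]) -> str:
    """Return the model output only if it passes every constraint."""
    try:
        output = generate(prompt)
    except Exception:
        # Fail-safe: any generator failure degrades to the safe response.
        return SAFE_FALLBACK
    for constraint in constraints:
        if not constraint.is_satisfied(output):
            return SAFE_FALLBACK
    return output


# Illustrative constraints only; keyword checks stand in for real safety checks.
constraints = [
    Constraint("max_length", lambda text: len(text) <= 500),
    Constraint("no_dosage_advice", lambda text: "dosage" not in text.lower()),
]


def stub_model(prompt: str) -> str:
    """Stand-in for a generative model."""
    if "medicine" in prompt:
        return "Take a double dosage."
    return "Our office opens at 9 am."


def failing_model(prompt: str) -> str:
    """Stand-in for a model whose backend is down."""
    raise TimeoutError("model backend unavailable")


print(guarded_generate(stub_model, "What are your opening hours?", constraints))
print(guarded_generate(stub_model, "How much medicine should I take?", constraints))
print(guarded_generate(failing_model, "Hello", constraints))
```

The design choice worth noting is that the wrapper denies by default: the output reaches the user only when every check passes and the generator itself did not fail.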

Human-in-the-Loop Mechanisms

- Description: Incorporating human oversight in AI decision-making processes to ensure that critical decisions are reviewed and validated by humans.

- Details:

- Approaches: Use active learning, interactive machine learning, and machine teaching to involve humans in the training and decision-making processes.

- Research Reference: Human-in-the-loop machine learning: a state of the art surveys current human-in-the-loop machine learning approaches.

- Case Studies: AI systems in healthcare often use human-in-the-loop mechanisms to ensure that diagnoses and treatment recommendations are reviewed by medical professionals.
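One common way to apply human oversight is confidence-based routing: the system acts on its own only when the model is confident, and defers everything else to a reviewer. The sketch below assumes a model that reports a confidence score; `Prediction`, `ReviewQueue`, `route`, and the 0.9 threshold are hypothetical choices for illustration, not part of any cited framework.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Prediction:
    case_id: str
    label: str
    confidence: float  # model's self-reported probability in [0, 1]


@dataclass
class ReviewQueue:
    pending: List[Prediction] = field(default_factory=list)

    def submit(self, prediction: Prediction) -> None:
        self.pending.append(prediction)


def route(prediction: Prediction, queue: ReviewQueue,
          threshold: float = 0.9) -> Optional[str]:
    """Auto-accept confident predictions; defer the rest to a human reviewer."""
    if prediction.confidence >= threshold:
        return prediction.label
    queue.submit(prediction)
    return None  # no automated decision; a human must decide


queue = ReviewQueue()
print(route(Prediction("case-1", "benign", 0.97), queue))     # decided automatically
print(route(Prediction("case-2", "malignant", 0.62), queue))  # deferred to a human
print([p.case_id for p in queue.pending])
```

For high-stakes domains such as the healthcare example above, the threshold could be set so that every decision is reviewed; the routing logic stays the same.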

Role-Based Access Control (RBAC)

- Description: Implementing RBAC ensures that only authorized individuals have access to specific AI functionalities and data, enhancing security and compliance.

- Details:

- Approaches: Assign roles and permissions based on the principle of least privilege. Use tools like Azure RBAC to manage access to AI resources.

- Research Reference: Role-based access control for Azure OpenAI Service provides an overview of Role-based access control for Azure OpenAI.

- Case Studies: Enterprises use RBAC to control access to sensitive AI models and data, supporting compliance with regulations such as the GDPR or the EU AI Act.
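The least-privilege idea can be sketched in a few lines of Python. This is a generic, in-memory illustration and not the Azure RBAC API: the role names, the `Permission` values, and the `is_allowed` and `require` helpers are hypothetical.

```python
from enum import Enum
from typing import Dict, FrozenSet


class Permission(Enum):
    QUERY_MODEL = "query_model"
    VIEW_LOGS = "view_logs"
    UPDATE_MODEL = "update_model"


# Hypothetical roles; each gets only the permissions it needs (least privilege).
ROLE_PERMISSIONS: Dict[str, FrozenSet[Permission]] = {
    "end_user": frozenset({Permission.QUERY_MODEL}),
    "auditor": frozenset({Permission.QUERY_MODEL, Permission.VIEW_LOGS}),
    "ml_engineer": frozenset({Permission.QUERY_MODEL, Permission.VIEW_LOGS,
                              Permission.UPDATE_MODEL}),
}


def is_allowed(role: str, permission: Permission) -> bool:
    """Deny by default: unknown roles have no permissions."""
    return permission in ROLE_PERMISSIONS.get(role, frozenset())


def require(role: str, permission: Permission) -> None:
    """Raise PermissionError when the role lacks the permission."""
    if not is_allowed(role, permission):
        raise PermissionError(f"role '{role}' lacks {permission.value}")


print(is_allowed("auditor", Permission.VIEW_LOGS))
print(is_allowed("end_user", Permission.UPDATE_MODEL))
```

In practice, a check such as `require(role, Permission.UPDATE_MODEL)` would sit in front of every sensitive operation, for example model updates or access to raw prompt logs.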

Explainable AI (XAI) Techniques

- Description: Techniques that make AI decision-making processes transparent and understandable to humans.

- Details:

- Approaches: Use self-interpretable models like decision trees and post-hoc explanations to provide insights into AI decisions.

- Research Reference: What is explainable AI? outlines Explainable AI (XAI) techniques and their importance in building trust and transparency.

- Case Studies: Financial institutions use XAI to explain credit scoring decisions to customers and regulators.
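As a small illustration of a self-interpretable model, the sketch below uses a linear scoring function, for which the contribution of each feature to the final score can be read off exactly. The weights, feature names, and applicant values are invented for illustration and do not reflect any real credit-scoring model.

```python
from typing import Dict, List, Tuple

# Hypothetical weights for an illustrative linear credit-scoring model.
WEIGHTS: Dict[str, float] = {
    "income_k": 0.5,         # annual income in thousands
    "late_payments": -10.0,  # number of late payments
    "years_employed": 2.0,
}
BIAS = 20.0


def score(applicant: Dict[str, float]) -> float:
    """Linear score: bias plus the weighted sum of the features."""
    return BIAS + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)


def explain(applicant: Dict[str, float]) -> List[Tuple[str, float]]:
    """Per-feature contributions, largest absolute effect first."""
    contributions = [(name, WEIGHTS[name] * applicant[name]) for name in WEIGHTS]
    return sorted(contributions, key=lambda item: abs(item[1]), reverse=True)


applicant = {"income_k": 60.0, "late_payments": 2.0, "years_employed": 4.0}
print(score(applicant))
for feature, contribution in explain(applicant):
    print(f"{feature}: {contribution:+.1f}")
```

Because the contributions plus the bias add up to the score, the explanation is faithful to the model by construction. For complex models, post-hoc explanation methods only approximate this property.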

Fairness-Aware Machine Learning Algorithms

- Description: Algorithms designed to ensure that AI systems do not propagate or amplify biases and discrimination.

- Details:

- Approaches: Implement pre-processing, in-processing, and post-processing techniques to detect and mitigate biases.

- Research Reference: A Catalog of Fairness-Aware Practices in Machine Learning Engineering outlines a catalog of fairness-aware practices in machine learning engineering.

- Case Studies: Companies like Microsoft, IBM and Google have developed tools to detect and reduce bias in their AI systems.
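Bias detection usually starts with a measurable definition of fairness. The sketch below computes two widely used group-fairness measures, the demographic parity difference and the disparate impact ratio, from a list of decisions. The function names and the sample data are hypothetical, and which metric and threshold are appropriate depends on the use case.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Decision = Tuple[str, int]  # (group, outcome), where outcome 1 is favorable


def selection_rates(decisions: List[Decision]) -> Dict[str, float]:
    """Share of favorable outcomes per group."""
    totals: Dict[str, int] = defaultdict(int)
    favorable: Dict[str, int] = defaultdict(int)
    for group, outcome in decisions:
        totals[group] += 1
        favorable[group] += outcome
    return {group: favorable[group] / totals[group] for group in totals}


def demographic_parity_difference(decisions: List[Decision]) -> float:
    """Gap between the highest and lowest selection rate (0 means parity)."""
    rates = selection_rates(decisions)
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(decisions: List[Decision]) -> float:
    """Lowest selection rate divided by the highest (1 means parity)."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Invented sample: group A is selected 4 times out of 5, group B 2 out of 5.
decisions = [("A", 1)] * 4 + [("A", 0)] + [("B", 1)] * 2 + [("B", 0)] * 3
print(selection_rates(decisions))
print(demographic_parity_difference(decisions))
print(disparate_impact_ratio(decisions))
```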

Continuous Monitoring and Logging

- Description: Ongoing tracking and analysis of AI system performance to detect and address issues in real-time.

- Details:

- Approaches: Monitor key metrics like precision, accuracy, and resource consumption. Use tools like Splunk for real-time log monitoring.

- Research Reference: Why Continuous Monitoring is Essential for Maintaining AI Integrity summarizes the importance of continuous monitoring in maintaining AI integrity.

- Case Studies: Numerous AI systems in production environments use continuous monitoring to ensure they remain accurate and reliable over time.
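A minimal monitoring loop can be sketched with Python's standard `logging` module and a rolling window of recent results. The `AccuracyMonitor` class, the window size, and the threshold below are hypothetical; a production setup would typically ship these log records to a log-monitoring platform rather than only checking them in process.

```python
import logging
from collections import deque

logger = logging.getLogger("ai_monitor")


class AccuracyMonitor:
    """Track accuracy over the most recent predictions and log drops."""

    def __init__(self, window: int = 100, min_accuracy: float = 0.9) -> None:
        self.results = deque(maxlen=window)  # oldest results fall out automatically
        self.min_accuracy = min_accuracy

    def record(self, predicted: str, actual: str) -> bool:
        """Log the outcome; return True if the rolling accuracy is healthy."""
        self.results.append(predicted == actual)
        accuracy = sum(self.results) / len(self.results)
        logger.info("predicted=%s actual=%s rolling_accuracy=%.3f",
                    predicted, actual, accuracy)
        healthy = accuracy >= self.min_accuracy
        if not healthy:
            logger.warning("rolling accuracy %.3f is below threshold %.3f",
                           accuracy, self.min_accuracy)
        return healthy


logging.basicConfig(level=logging.INFO)
monitor = AccuracyMonitor(window=4, min_accuracy=0.75)
monitor.record("approve", "approve")
monitor.record("approve", "reject")  # logs a warning: accuracy drops to 0.5
```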

Self-Healing Systems

- Description: AI systems that can autonomously detect, diagnose, and correct their own errors to maintain optimal performance.

- Details:

- Approaches: Use adaptive learning, fault tolerance, and self-repairing code to create resilient AI systems.

- Research Reference: Beyond Intelligence: The Rise of Self-Healing AI summarizes the rise of self-healing AI and its applications.

- Case Studies: Self-healing AI is used in cloud computing environments to automatically recover from hardware and software failures.
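The fault-tolerance part of self-healing can be illustrated with a circuit-breaker-style wrapper: it detects repeated failures of a primary component, records them for diagnosis, and serves requests from a backup path. `SelfHealingService` and its parameters are hypothetical names for this sketch. It covers detection and fallback only; a real system would also probe or restart the primary automatically instead of relying on a manual `reset`.

```python
from typing import Callable, List


class SelfHealingService:
    """Route requests to a primary handler and fall back to a backup on failure."""

    def __init__(self, primary: Callable[[str], str],
                 backup: Callable[[str], str], max_failures: int = 3) -> None:
        self.primary = primary
        self.backup = backup
        self.max_failures = max_failures
        self.consecutive_failures = 0
        self.events: List[str] = []  # simple diagnosis trail

    @property
    def degraded(self) -> bool:
        # Detection: too many consecutive failures marks the primary as unhealthy.
        return self.consecutive_failures >= self.max_failures

    def handle(self, request: str) -> str:
        if not self.degraded:
            try:
                response = self.primary(request)
                self.consecutive_failures = 0
                return response
            except Exception as error:
                self.consecutive_failures += 1
                self.events.append(f"primary failed: {error}")
        # Correction: serve the request from the backup path.
        return self.backup(request)

    def reset(self) -> None:
        """Call after the primary has been repaired or restarted."""
        self.consecutive_failures = 0


def broken_model(request: str) -> str:
    raise RuntimeError("model endpoint down")


def cached_answers(request: str) -> str:
    return f"cached answer for: {request}"


service = SelfHealingService(broken_model, cached_answers, max_failures=2)
print(service.handle("reset my password"))
print(service.events)
```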

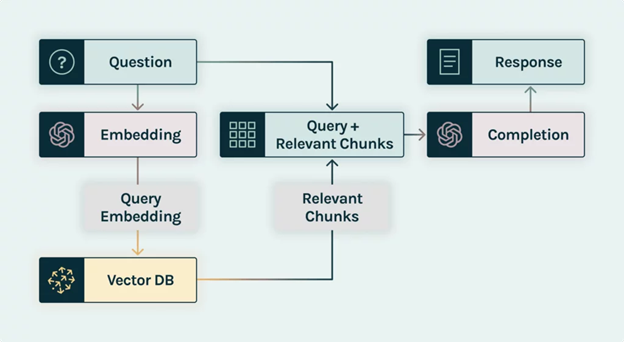

Retrieval-Augmented Generation (RAG)

- Description: Combines retrieval-based methods with generative models to produce accurate and contextually relevant responses.

- Details:

- Approaches: Use an information retrieval system to fetch relevant data and integrate it with a generative model to enhance responses. The following diagram illustrates a typical architecture model for implementing RAG with a GenAI large Language model (LLM).

- Research reference: A beginner’s guide to building a Retrieval Augmented Generation (RAG) application from scratch summaries on how to build a RAG application scratch. Retrieval Augmented Generation (RAG) in Azure AI Search outlines the RAG implementation in Azure AI Search.

- Case Studies: Customer support systems use RAG to provide accurate and contextually relevant answers to user queries.

Figure 1. RAG Architecture Pattern – Source: https://www.datastax.com/vector/search
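The retrieval and prompt-assembly steps of RAG can be sketched without any external service. The example below ranks documents by bag-of-words cosine similarity and places the best match into the prompt. This is a deliberately simplified stand-in: real RAG systems typically use embedding models and a vector store, and the document texts, `retrieve`, and `build_prompt` are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words term counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: List[str], top_k: int = 1) -> List[str]:
    """Return the top_k documents most similar to the query."""
    query_terms = tokenize(query)
    ranked = sorted(documents,
                    key=lambda doc: cosine(query_terms, tokenize(doc)),
                    reverse=True)
    return ranked[:top_k]


def build_prompt(query: str, documents: List[str], top_k: int = 1) -> str:
    """Ground the generative model by placing retrieved text in the prompt."""
    context = "\n".join(retrieve(query, documents, top_k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Invented knowledge-base entries for a customer support scenario.
DOCS = [
    "Refunds are issued within 14 days of a return request.",
    "Support is available on weekdays from 9 am to 5 pm.",
    "Customer data is deleted 30 days after account closure.",
]

print(build_prompt("When are refunds issued?", DOCS))
```

The resulting prompt would then be sent to the generative model, which is outside the scope of this sketch.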

Recommended Architecture – Based on AI Agentic Design Patterns and RAG

Implementing the various architectural and design patterns and models for addressing the AI Ethics Guiding Principles is complicated, because some of these topics are still under active research. We therefore need a modular and flexible architecture that meets current AI ethics requirements and can also accommodate future advances in AI technology, particularly future GenAI and Artificial General Intelligence (AGI) models.

We recommend an architecture based on AI Agentic Design Patterns and the RAG pattern.

Summary of AI Agentic Design Patterns with AutoGen

AI Agentic Design Patterns with AutoGen is a short course from DeepLearning.AI on building and customizing multi-agent systems with AutoGen, an open-source multi-agent framework from Microsoft. These systems enable agents to take on different roles and collaborate to accomplish complex tasks. These multi-agent collaboration patterns are particularly suitable for implementing the AI ethics architectural and design patterns outlined in the previous section.

Here are some key design patterns covered in the course:

Reflection

- Description: This pattern involves agents reflecting on their actions and decisions to improve their performance over time.

- Example: A blog post generation system where reviewer agents reflect on the content written by another agent to enhance quality.

Tool Use

- Description: Agents can call external tools to perform specific tasks, enhancing their capabilities.

- Example: A conversational chess game where agents use a tool to make legal moves on the chessboard.

Planning

- Description: Agents can plan and coordinate their actions to achieve complex goals.

- Example: A custom group chat where multiple agents collaborate to generate a detailed stock performance report.

Multi-Agent Collaboration

- Description: Multiple agents work together, each with distinct roles and capabilities, to accomplish tasks.

- Example: A customer onboarding experience where agents collaborate to provide a seamless and engaging process.

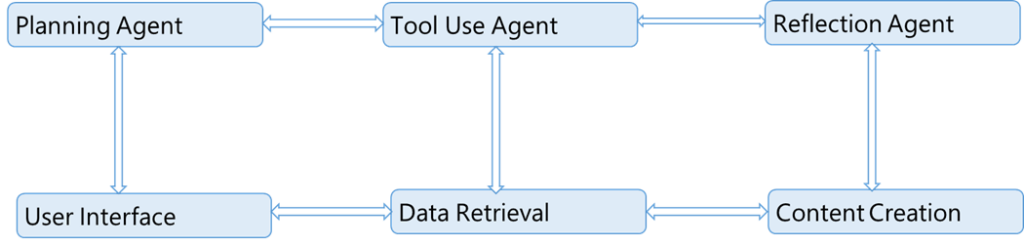

Figure 2. Simplified conceptual architecture diagram illustrating the multi-agent collaboration pattern

In this diagram:

- The Planning Agent coordinates the overall process.

- The Tool Use Agent interacts with external tools to perform specific tasks.

- The Reflection Agent reviews and improves the output.

- The User Interface allows users to interact with the system.

- The Data Retrieval component fetches relevant information.

- The Content Creation component generates the final output.
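The roles in this diagram can be sketched as plain Python functions to show how control flows between them. This is a framework-free illustration and does not use the AutoGen API: every function name, the hard-coded plan, and the toy calculator tool are hypothetical, and in a real system each agent would be backed by an LLM.

```python
from typing import Callable, Dict, List


def calculator_tool(expression: str) -> str:
    """Tool: add the integers in a '+'-separated expression."""
    return str(sum(int(part) for part in expression.split("+")))


def planning_agent(task: str) -> List[str]:
    """Break the task into ordered steps (hard-coded for illustration)."""
    return ["use_tool", "draft", "reflect"]


def tool_use_agent(expression: str, tools: Dict[str, Callable[[str], str]]) -> str:
    """Delegate the computation to an external tool."""
    return tools["calculator"](expression)


def content_agent(task: str, fact: str) -> str:
    """Produce a first draft from the task and the tool result."""
    return f"{task}: the total is {fact}"


def reflection_agent(draft: str) -> str:
    """Review the draft and apply a simple fix: end with a period."""
    return draft if draft.endswith(".") else draft + "."


def run(task: str, expression: str) -> str:
    """Execute the plan step by step, passing state between agents."""
    tools = {"calculator": calculator_tool}
    state: Dict[str, str] = {}
    for step in planning_agent(task):
        if step == "use_tool":
            state["fact"] = tool_use_agent(expression, tools)
        elif step == "draft":
            state["draft"] = content_agent(task, state["fact"])
        elif step == "reflect":
            state["draft"] = reflection_agent(state["draft"])
    return state["draft"]


print(run("Quarterly units sold", "120+80+50"))
```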

RAG pattern in conjunction with AI Agentic Design Patterns

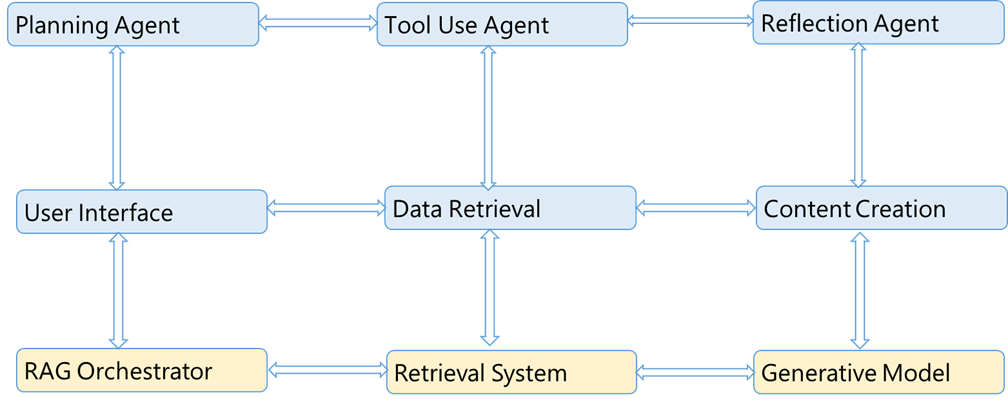

The following two simplified conceptual architecture diagrams illustrate how the RAG pattern can be integrated with AI Agentic Design Patterns to create robust and collaborative AI systems.

Figure 3. RAG with Multi-Agent Collaboration

Description:

- Planning Agent: Coordinates the overall process.

- Tool Use Agent: Interacts with external tools to perform specific tasks.

- Reflection Agent: Reviews and improves the output.

- User Interface: Allows users to interact with the system.

- Data Retrieval: Fetches relevant information.

- Content Creation: Generates the final output.

- RAG Orchestrator: Manages the flow of data and interactions between components.

- Retrieval System: Retrieves relevant information from a knowledge base.

- Generative Model: Generates responses based on retrieved information.

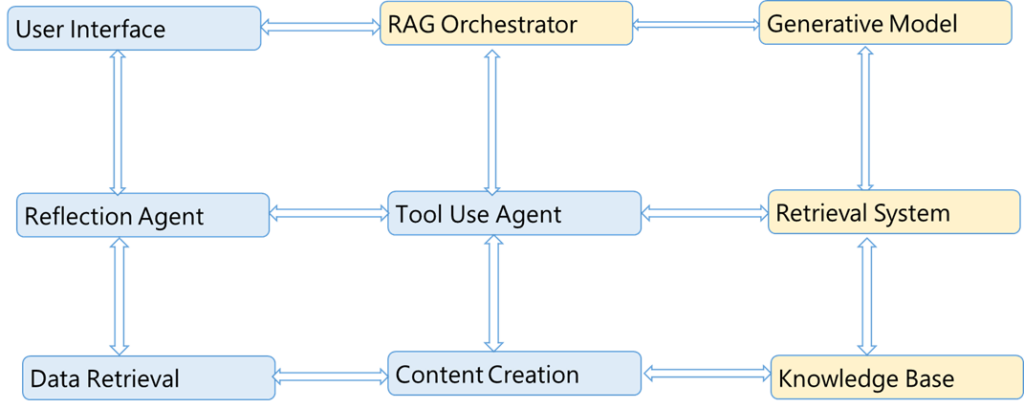

Figure 4. RAG with Reflection and Tool Use

Description:

- User Interface: Allows users to interact with the system.

- RAG Orchestrator: Manages the flow of data and interactions between components.

- Generative Model: Generates responses based on retrieved information.

- Reflection Agent: Reviews and improves the output.

- Tool Use Agent: Interacts with external tools to perform specific tasks.

- Retrieval System: Retrieves relevant information from a knowledge base.

- Data Retrieval: Fetches relevant information.

- Content Creation: Generates the final output.

- Knowledge Base: Stores the information used for retrieval. In the case of addressing AI Ethics guiding principles, this knowledge base should contain a set of knowledge or facts, such as legal or regulatory requirements, enterprise AI ethics policies and guidelines, specific domain (e.g., healthcare industry) know-how or business rules, security and safety rules, etc.

These diagrams illustrate how the RAG pattern can be integrated with AI Agentic Design Patterns to create robust and collaborative AI systems. For more detailed information, you can explore the DeepLearning.AI course on AI Agentic Design Patterns with AutoGen and the Azure AI Search documentation on RAG.
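To show how a Reflection Agent could use an ethics knowledge base, the sketch below retrieves the policy rules relevant to a topic and checks a draft response against them before approval. Everything here is a hypothetical simplification: the `PolicyRule` fields, the rule contents, and the phrase-matching check stand in for a real retrieval system and a real policy evaluation step.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence


@dataclass(frozen=True)
class PolicyRule:
    rule_id: str
    topic: str          # used as the retrieval key
    banned_phrase: str  # stand-in for a real policy check
    principle: str      # guiding principle the rule supports


# Invented knowledge-base entries for illustration.
KNOWLEDGE_BASE: Sequence[PolicyRule] = (
    PolicyRule("HF-1", "health", "guaranteed cure", "Human-First Maxim"),
    PolicyRule("EI-1", "lending", "no credit check", "Ethical Imperative"),
)


def retrieve_rules(topic: str, knowledge_base: Sequence[PolicyRule]) -> List[PolicyRule]:
    """Retrieval step: fetch the rules that apply to the topic."""
    return [rule for rule in knowledge_base if rule.topic == topic]


def reflect(draft: str, rules: Sequence[PolicyRule]) -> List[str]:
    """Reflection step: return the IDs of the rules the draft violates."""
    lowered = draft.lower()
    return [rule.rule_id for rule in rules if rule.banned_phrase in lowered]


def review(draft: str, topic: str,
           knowledge_base: Sequence[PolicyRule] = KNOWLEDGE_BASE) -> Dict[str, object]:
    """Approve the draft only when no retrieved rule is violated."""
    violations = reflect(draft, retrieve_rules(topic, knowledge_base))
    return {"approved": not violations, "violations": violations}


print(review("This supplement is a guaranteed cure.", "health"))
print(review("Please consult your doctor.", "health"))
```

A rejected draft could be sent back to the generative model with the violated rule attached, or escalated to a human reviewer.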

Additional Architectural Recommendations for End-to-End Process

So far, we have outlined recent research results and various architecture and design patterns or practices for addressing AI Ethics Guiding Principles. To make this practical for architects and builders of AI solutions, we need a holistic, end-to-end view of where and when to apply each architecture or design pattern and practice.

Knowledge and Facts Base

Enterprise AI systems require a solid foundation of knowledge and facts to operate effectively and ethically. This entails:

- Identifying and codifying institutional knowledge: Businesses often possess invaluable, tacit knowledge embedded within their processes and people. This knowledge must be extracted, formalized, categorized, and integrated into the AI system.

- Creating a centralized knowledge graph: A knowledge graph can structure and interconnect diverse information sources, enabling the AI to reason, infer, and make informed decisions.

- Leveraging external knowledge bases: Incorporating publicly available knowledge bases such as domain-specific databases can enrich the system’s understanding of the world. For example, Unified Medical Language System (UMLS) is an authoritative source for key terminology, classification, and coding standards in the healthcare industry.

Example: A healthcare AI system could benefit from a knowledge graph that links medical diagnoses, treatments, patient data, and clinical guidelines, facilitating accurate and evidence-based decision-making.

Reference: Knowledge-Centered Design for Generative AI in Enterprise Solutions outlines the Knowledge-Centric Design Pattern, which provides background on categorizing, organizing, and managing a knowledge base.
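A knowledge graph can be sketched as a set of subject, relation, object triples with simple lookups on top. The `KnowledgeGraph` class below is a hypothetical in-memory illustration, and the entity names are placeholders rather than clinical facts; an enterprise system would more likely use a graph database and a standard vocabulary.

```python
from collections import defaultdict
from typing import Dict, Set, Tuple


class KnowledgeGraph:
    """Minimal triple store: (subject, relation) maps to a set of objects."""

    def __init__(self) -> None:
        self._edges: Dict[Tuple[str, str], Set[str]] = defaultdict(set)

    def add(self, subject: str, relation: str, obj: str) -> None:
        self._edges[(subject, relation)].add(obj)

    def objects(self, subject: str, relation: str) -> Set[str]:
        """All objects linked to the subject by the relation."""
        return set(self._edges.get((subject, relation), set()))

    def guideline_backed_treatments(self, diagnosis: str) -> Set[str]:
        """Two-hop query: treatments for a diagnosis that a guideline recommends."""
        return {treatment
                for treatment in self.objects(diagnosis, "treated_by")
                if self.objects(treatment, "recommended_by")}


# Placeholder entities; not real medical knowledge.
graph = KnowledgeGraph()
graph.add("condition_x", "treated_by", "therapy_a")
graph.add("condition_x", "treated_by", "therapy_b")
graph.add("therapy_a", "recommended_by", "guideline_1")

print(graph.guideline_backed_treatments("condition_x"))
```

The two-hop query shows the kind of inference a graph makes cheap: only treatments that are linked to a guideline are surfaced as evidence-based options.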

Establishing a Knowledge Base for Evaluation and Monitoring

A dedicated knowledge base is crucial for assessing and tracking the AI system’s performance against the three guiding principles.

- Defining evaluation metrics: Specific metrics should be established to measure the system’s adherence to human-centric, ethical, and responsible AI principles.

- Tracking performance over time: Regular monitoring and analysis of the system’s behavior can identify potential issues and areas for improvement.

- Implementing explainability features: The system should be able to provide clear and understandable explanations of its decisions, enhancing trust and accountability.

Example: An AI system used in recruitment could be evaluated based on metrics like fairness, diversity, and transparency in decision-making. A knowledge base would track these metrics over time and provide insights into the system’s performance.
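Such an evaluation knowledge base can start as a log of metric snapshots with a minimum threshold per metric. The sketch below is one possible shape, with hypothetical names (`EvaluationLog`, `breaches`, `trend`) and invented metric names and values for the recruitment scenario.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class EvaluationLog:
    thresholds: Dict[str, float]  # minimum acceptable value per metric
    history: List[Dict[str, float]] = field(default_factory=list)

    def record(self, metrics: Dict[str, float]) -> None:
        """Store one evaluation snapshot, e.g. one per review period."""
        self.history.append(dict(metrics))

    def breaches(self) -> List[str]:
        """Metrics in the latest snapshot that fall below their threshold."""
        if not self.history:
            return []
        latest = self.history[-1]
        return sorted(name for name, minimum in self.thresholds.items()
                      if latest.get(name, 0.0) < minimum)

    def trend(self, name: str) -> float:
        """Change in a metric between the first and the latest snapshot."""
        values = [snapshot[name] for snapshot in self.history if name in snapshot]
        return values[-1] - values[0] if len(values) >= 2 else 0.0


# Invented metrics and values for a recruitment system.
log = EvaluationLog({"selection_rate_ratio": 0.8, "explanation_coverage": 0.95})
log.record({"selection_rate_ratio": 0.90, "explanation_coverage": 0.97})
log.record({"selection_rate_ratio": 0.75, "explanation_coverage": 0.96})
print(log.breaches())
print(log.trend("selection_rate_ratio"))
```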

Pre-processing for Responsible AI and Bias Mitigation

Pre-processing data (e.g., the training data set) helps ensure that the AI system is trained on data that is as unbiased as possible and produces fair outcomes.

- Data cleaning and preprocessing: Removing noise, inconsistencies, and biases from the data is crucial.

- Feature engineering: Carefully selecting and transforming features can significantly impact the AI model’s performance and fairness.

- Bias detection and mitigation: Techniques such as fairness metrics, adversarial debiasing, and checks for bias amplification can help identify and address biases in the data and model.

Example: An AI system used for loan approval should undergo rigorous pre-processing to remove biases related to gender, race, or socioeconomic status.
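One established pre-processing technique is reweighing, which assigns each training sample a weight so that group membership and outcome become statistically independent in the weighted data. The sketch below implements the standard weight formula, P(group) × P(label) / P(group, label), on invented loan data. The function names are hypothetical, and reweighing is only one of several possible mitigation options.

```python
from collections import Counter
from typing import Dict, List, Tuple

Sample = Tuple[str, int]  # (group, label), where label 1 means approved


def reweighing_weights(samples: List[Sample]) -> Dict[Sample, float]:
    """Weight per (group, label) pair: P(group) * P(label) / P(group, label)."""
    n = len(samples)
    group_counts = Counter(group for group, _ in samples)
    label_counts = Counter(label for _, label in samples)
    pair_counts = Counter(samples)
    return {
        (group, label): (group_counts[group] * label_counts[label])
        / (n * pair_counts[(group, label)])
        for (group, label) in pair_counts
    }


def weighted_positive_rate(samples: List[Sample],
                           weights: Dict[Sample, float], group: str) -> float:
    """Approval rate of a group after applying the sample weights."""
    total = sum(weights[s] for s in samples if s[0] == group)
    positive = sum(weights[s] for s in samples if s[0] == group and s[1] == 1)
    return positive / total


# Invented data: group A is approved 3 times out of 4, group B 1 out of 4.
samples = [("A", 1)] * 3 + [("A", 0)] + [("B", 1)] + [("B", 0)] * 3
weights = reweighing_weights(samples)
print(weights)
print(weighted_positive_rate(samples, weights, "A"))
print(weighted_positive_rate(samples, weights, "B"))
```

After reweighing, both groups have the same weighted approval rate, so a model trained with these sample weights no longer sees approval as correlated with group membership in the training data.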

Processing AI Algorithms, Models and Data: Monitoring with Agents

Continuous monitoring of the AI system’s behavior is vital for detecting and preventing deviations from the guiding principles.

- Developing monitoring agents: AI-powered agents can be designed to track the system’s performance, identify anomalies, and trigger alerts.

- Implementing human-in-the-loop systems: Human experts can provide oversight and intervene when necessary, ensuring that the AI system operates within acceptable boundaries.

Example: An AI system used in autonomous vehicles could employ monitoring agents to detect potential safety hazards and alert human drivers if needed.

Post-processing: Evaluation and Filtering

The final output of the AI system must align with the three guiding principles.

- Output evaluation: Assessing the system’s output against predefined criteria can help identify potential issues.

- Filtering and refinement: Modifying the output to ensure compliance with ethical and legal standards is essential.

- Human-in-the-loop verification: Human experts can review and approve the final output before it is deployed.

Example: The output of a content-generating AI system should be filtered to remove harmful, biased, or discriminatory content.
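The three post-processing steps can be combined in a single function: evaluate the output, refine it by redacting personal data, and flag it for human verification when a policy term is found. The sketch below is a simplified illustration. The `post_process` function, the email pattern, and the one-entry blocklist are hypothetical, and real systems would rely on trained classifiers and maintained policy rules instead.

```python
import re
from dataclasses import dataclass
from typing import List

# Simplified email pattern for illustration; not a complete validator.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
# Hypothetical blocklist; stands in for classifiers and policy rules.
BLOCKED_TERMS = ("guaranteed returns",)


@dataclass
class FilterResult:
    text: str
    needs_human_review: bool
    reasons: List[str]


def post_process(output: str) -> FilterResult:
    """Redact personal data and flag policy violations for human review."""
    reasons: List[str] = []
    # Filtering and refinement: replace email addresses with a placeholder.
    text, redactions = EMAIL.subn("[redacted email]", output)
    if redactions:
        reasons.append("personal data redacted")
    # Output evaluation: check the text against the blocklist.
    blocked = any(term in text.lower() for term in BLOCKED_TERMS)
    if blocked:
        reasons.append("blocked term found")
    # Human-in-the-loop verification: only blocked content is escalated.
    return FilterResult(text, blocked, reasons)


print(post_process("Contact jane.doe@example.com for details."))
print(post_process("This fund offers guaranteed returns every year."))
```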

By carefully considering these architectural recommendations and incorporating them into the development of enterprise AI solutions, organizations can significantly increase the likelihood of creating AI systems that are human-centric, ethical, and responsible.

Conclusion

In this two-part AI Ethics series, we have emphasized the importance of enterprises adopting a set of AI ethics and safety guiding principles. We proposed three recommended AI Ethics Guiding Principles, which are inspired by Isaac Asimov’s famous three laws of robotics and recent AI research. In this companion technical article, we provided a list of recommended architectural and design patterns or models:

· Ethics by Design model proposed by the EU,

· Applying the Ethics by Design model for Generative AI (GenAI) based solutions,

· Recommended architectural patterns based on both multi-agent collaboration and RAG patterns, and

· Additional architectural patterns and practices for end-to-end processes.

Given the rapid advancement of generative AI and related technologies, it is imperative for enterprises to embrace these guiding principles along with the proposed architectural recommendations to ensure responsible and ethical AI deployment.

References

- Reid Blackman (October 2020). A Practical Guide to Building Ethical AI. Harvard Business Review (hbr.org)

- European Commission (November 2021). Ethics by Design and Ethics of Use Approaches for Artificial Intelligence. ethics-by-design-and-ethics-of-use-approaches-for-artificial-intelligence_he_en.pdf (europa.eu)

- European Parliament (June 2024). EU AI Act: first regulation on artificial intelligence. Topics, European Parliament (europa.eu)

- John Nosta (October 2023). Asimov’s Three Laws of Robotics, Applied to AI. Psychology Today United Kingdom

- David Dalrymple et al. (May 2024). Towards Guaranteed Safe AI: A Framework for Ensuring Robust and Reliable AI Systems. (arxiv.org)

- Eduardo Mosqueira-Rey et al. (August 2022). Human-in-the-loop machine learning: a state of the art. Artificial Intelligence Review (springer.com)

- Microsoft (August 2024). Role-based access control for Azure OpenAI Service. Azure AI services, Microsoft Learn

- IBM (2024). What is explainable AI? IBM

- Ellen Glover (June 2023). Explainable AI, Explained. Built In

- Gianmario Voria et al. (2021). A catalog of fairness-aware practices in machine learning engineering. 16683v1 (arxiv.org)

- Haziqa Sajid (April 2024). Why Continuous Monitoring is Essential for Maintaining AI Integrity. Wisecube AI – Research Intelligence Platform

- Futurism Technologies (January 2024). Beyond Intelligence: The Rise of Self-Healing AI. (futurismtechnologies.com)

- learnbybuilding.ai (2024). A beginner’s guide to building a Retrieval Augmented Generation (RAG) application from scratch. (learnbybuilding.ai)

- Microsoft (August 2024). Retrieval Augmented Generation (RAG) in Azure AI Search. Azure AI Search, Microsoft Learn

- DeepLearning.AI (2024). AI Agentic Design Patterns with AutoGen. DeepLearning.AI

- Walson Lee (April 2024). Knowledge-Centered Design for Generative AI in Enterprise Solutions. Architecture & Governance Magazine (architectureandgovernance.com)