Organisations are deploying multiple AI initiatives that have the potential to transform operations, drive innovation, and enhance competitiveness. According to Gartner, more than 80% of enterprises will have used generative AI APIs or deployed generative AI-enabled applications by 2026. Without a robust Governance, Risk, and Compliance (GRC) framework spanning these diverse projects, there is a risk of ethical lapses, compliance breaches, and unintended AI behaviours.

Let’s look at the aspects that form a comprehensive, organisation-level GRC strategy, one that ensures all AI initiatives remain responsible, transparent, and aligned with business objectives.

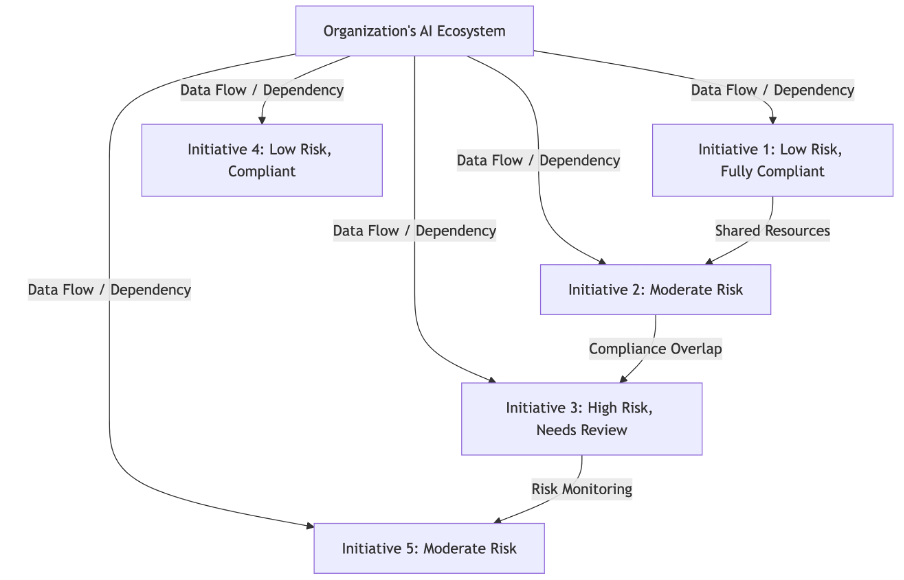

Assessing the Organisation’s AI Posture

The first step to an effective GRC strategy is to understand the overall AI estate at an organisation.

This involves mapping every AI initiative across the organisation, including the systems in use, data sources, deployment environments, and the interdependencies among these components. Let’s call this the initiative inventory.

This comprehensive overview of the inventory is essential for pinpointing vulnerabilities, compliance gaps, and ethical challenges, and forms a critical baseline for risk management and effective governance.

For example, the graph above maps various AI initiatives and labels their associated risks and compliance statuses. This visual demonstration reveals the interdependence among initiatives through shared data flows and resources, while also flagging high-risk projects that require further review.

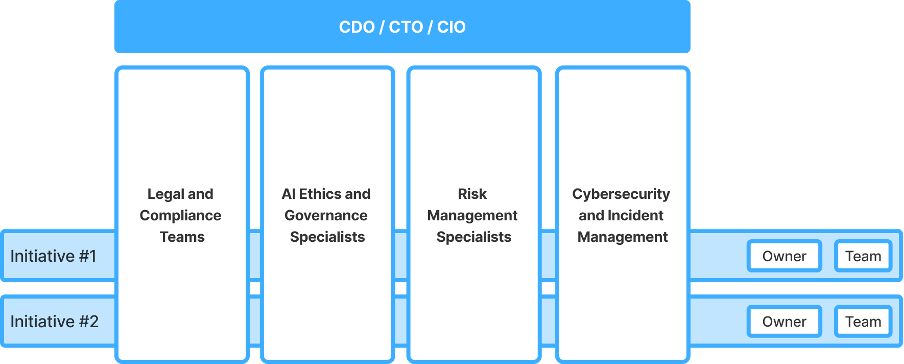
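As a rough illustration of the initiative inventory described in this section, the sketch below models each initiative as a small record and derives two views from it: data sources shared by more than one initiative, and initiatives that need further review. The `Initiative` fields, the risk labels, and the sample entries are all assumptions made for this example, not a prescribed schema.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Initiative:
    """One entry in a hypothetical initiative inventory."""
    name: str
    systems: list
    data_sources: list
    environment: str
    risk: str = "unassessed"          # assumed labels: "low", "medium", "high"
    compliant: Optional[bool] = None  # None means not yet reviewed


def shared_data_sources(inventory):
    """Map each data source to the initiatives using it,
    keeping only sources used by more than one initiative."""
    usage = {}
    for item in inventory:
        for source in item.data_sources:
            usage.setdefault(source, []).append(item.name)
    return {src: names for src, names in usage.items() if len(names) > 1}


def needs_review(inventory):
    """Flag initiatives that are high risk or not confirmed compliant."""
    return [i.name for i in inventory if i.risk == "high" or i.compliant is not True]


# Sample data, invented for illustration only.
inventory = [
    Initiative("support-chatbot", ["llm-api"], ["crm", "tickets"], "cloud", "medium", True),
    Initiative("churn-model", ["ml-platform"], ["crm", "billing"], "on-prem", "high", True),
    Initiative("hr-search", ["llm-api"], ["hr-records"], "cloud", "low", None),
]
```

Here `shared_data_sources(inventory)` would surface the `crm` dependency between the first two initiatives, and `needs_review(inventory)` would list the high-risk and unreviewed ones. In practice the inventory would more likely live in a catalogue or database than in code.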

Defining Roles and Responsibilities at Scale

With this inventory in place, the next step is to identify key roles and establish a common set of accountabilities across all AI initiatives. Clearly delineated roles ensure that every stakeholder, whether part of a centralised team or spread across business units, understands their specific responsibilities, which streamlines decision-making and minimizes ambiguity.

It is equally important to ensure that third-party providers, vendors, and partners contributing to an initiative adhere to the organisation’s governance standards.

Key roles should include:

| Role | Responsibility |
| --- | --- |
| CDO / CTO / CIO | Provide strategic oversight and ensure organisation-wide accountability. |
| Initiative Owner | Maintain overall ownership of development, risk management, compliance, and ethical aspects. |
| Implementation Teams | Develop and deploy AI systems in alignment with established governance standards. |
| Legal and Compliance Teams | Ensure regulatory compliance and support audit processes. |
| AI Ethics and Governance Specialists | Oversee ethical considerations and lead policy development for responsible AI practices. |
| Risk Management Specialists | Conduct risk assessments and design effective mitigation strategies. |
| Incident Management Team | Identify potential threats, respond to breaches, and implement preventive measures. |

Developing a Risk and Compliance Strategy

Managing risks across multiple AI initiatives is the cornerstone of a resilient GRC framework. Aligning AI development with organizational goals while addressing high-impact risks allows for effective resource allocation and proactive policy creation.

Organizations should conduct a comprehensive review of their AI inventory: assessing risks such as potential data breaches, security vulnerabilities, and algorithmic bias; verifying compliance with regulations such as GDPR and CCPA; and evaluating models against the organisation’s core values.

For example, a healthcare organisation should focus on reviewing access protocols to prevent unauthorized use of patient data, which could lead to serious privacy breaches, and on meeting GDPR for EU patients’ data or HIPAA for US patients’ data.

For an organisation in the social media space, detecting hate speech, bullying, and misinformation is essential, as is ensuring that every layer of its initiatives consistently supports GDPR’s right to be forgotten and CCPA’s consumer opt-out provisions.

A retail organisation that focuses on personalised recommendations should ensure that personally identifiable information (PII) and other sensitive customer data are not used in personalisation.

An effective risk assessment should evaluate if such compliance and ethical practices are adhered to across initiatives.
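One way to picture such a cross-initiative assessment is a simple gap check: each initiative declares which regulations apply to it and which controls it has implemented, and the check reports what is missing. The sketch below is a minimal illustration only. The control names in `REQUIRED_CONTROLS` and the sample initiatives are hypothetical; a real mapping would come from legal and compliance review rather than a hard-coded dictionary.

```python
# Illustrative control names only; not a statement of what each regulation requires.
REQUIRED_CONTROLS = {
    "GDPR": {"right_to_erasure"},
    "CCPA": {"consumer_opt_out"},
    "HIPAA": {"patient_data_access_control"},
}


def compliance_gaps(initiatives):
    """Return {initiative: {regulation: [missing controls]}} for every gap found."""
    gaps = {}
    for name, info in initiatives.items():
        missing_by_regulation = {}
        for regulation in info["regulations"]:
            missing = REQUIRED_CONTROLS[regulation] - set(info["controls"])
            if missing:
                missing_by_regulation[regulation] = sorted(missing)
        if missing_by_regulation:
            gaps[name] = missing_by_regulation
    return gaps


# Sample data, invented for illustration only.
initiatives = {
    "patient-triage": {
        "regulations": ["GDPR", "HIPAA"],
        "controls": ["right_to_erasure"],
    },
    "feed-moderation": {
        "regulations": ["GDPR", "CCPA"],
        "controls": ["right_to_erasure", "consumer_opt_out"],
    },
}
```

Running `compliance_gaps(initiatives)` on this sample reports only the `patient-triage` initiative, which lacks the assumed HIPAA access control. Initiatives with no gaps are omitted from the result.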

Operationalizing AI Governance

Converting governance policies into practical, day-to-day practices is key to making them effective across all AI initiatives. Embedding continuous monitoring, auditing, and transparent reporting into operational workflows transforms high-level policies into actionable safeguards.

Organizations should deploy AI governance platforms that monitor performance in real time, perform regular audits, and maintain detailed audit trails to clarify decision-making.
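To make the idea of a detailed audit trail more concrete, here is a minimal sketch of a tamper-evident log in which each entry stores a hash of the previous one. Hash chaining is one possible technique chosen for this example, not something a particular governance platform is known to use, and the function names and event fields are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder hash for the first entry


def append_entry(log, event):
    """Append an event to the log, chaining it to the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    payload = json.dumps(event, sort_keys=True)
    entry_hash = hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()
    log.append({"event": event, "prev_hash": prev_hash, "hash": entry_hash})
    return log


def verify_log(log):
    """Recompute every hash; return False if any entry was altered or reordered."""
    prev_hash = GENESIS_HASH
    for entry in log:
        payload = json.dumps(entry["event"], sort_keys=True)
        expected = hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()
        if entry["prev_hash"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True
```

An auditor could call `verify_log(log)` periodically. Editing any earlier event changes its hash and breaks the chain for every later entry, which makes silent modification easier to detect.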

Additionally, operationalizing governance requires promoting transparency and accountability by standardizing methods to explain model decisions, maintaining comprehensive records of development and deployment, and periodically sharing performance metrics and bias mitigation efforts with stakeholders. For example, with the latest reasoning models in the LLM space, explaining model decisions has become easier.

Data Governance and Privacy Management

Imagine a search that uncovers salary information of your employees because the data wasn’t properly marked as confidential. This lapse in data tagging can lead to unauthorized access to sensitive financial details, potentially compromising employee privacy and exposing the organisation to reputational and competitive risks.

Consolidating data access and processing into a centralised data hub is the first step towards ensuring privacy and regulatory compliance.

Once the data has been centralised, quality checks that confirm the data is accurate, complete, and free from bias are essential to prevent AI errors. The data pipelines should include filters that remove data that doesn’t meet the quality standards.
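A pipeline filter of this kind could look something like the sketch below. It covers only completeness and a crude plausibility check; bias checks usually need dataset-level statistics and are out of scope here. The schema in `REQUIRED_FIELDS` and the age range are assumptions made for illustration.

```python
REQUIRED_FIELDS = ("customer_id", "age", "country")  # hypothetical schema


def passes_quality_checks(record):
    """Accept a record only if it is complete and its values are plausible."""
    # Completeness: every required field is present and non-empty.
    if any(record.get(field) in (None, "") for field in REQUIRED_FIELDS):
        return False
    # Accuracy (very rough): age must be an integer in an assumed plausible range.
    age = record["age"]
    return isinstance(age, int) and 0 <= age <= 120


def filter_records(records):
    """Split records into kept and rejected, so that rejects can be audited."""
    kept, rejected = [], []
    for record in records:
        (kept if passes_quality_checks(record) else rejected).append(record)
    return kept, rejected
```

Returning the rejected records alongside the kept ones, rather than silently dropping them, keeps the filter itself auditable.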

The next step is to apply the policies needed to enforce redaction, tokenization, and data tagging, and to maintain hierarchies of data (or tags). AI and LLM initiatives can then leverage these to adhere to Responsible AI principles and the ethical use of systems and data.
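The three policies can be sketched in a few lines each. In the example below, redaction uses a simple email pattern, tokenization is approximated with a keyed hash (a stand-in for a vault-based tokenization service, which is the more common production approach), and tagging uses a hypothetical three-level hierarchy. All names and levels are assumptions made for this example.

```python
import hashlib
import hmac
import re

EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")

# Hypothetical tag hierarchy, ordered from least to most sensitive.
TAG_LEVELS = {"public": 0, "internal": 1, "confidential": 2}


def redact_emails(text):
    """Replace anything that looks like an email address with a placeholder."""
    return EMAIL_PATTERN.sub("[REDACTED_EMAIL]", text)


def tokenize(value, secret_key):
    """Swap a sensitive value for a stable token derived from a keyed hash.

    secret_key must be bytes; the same value and key always give the same token.
    """
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "tok_" + digest[:16]


def visible_documents(documents, clearance):
    """Keep only documents whose tag is at or below the caller's clearance."""
    limit = TAG_LEVELS[clearance]
    return [doc for doc in documents if TAG_LEVELS[doc["tag"]] <= limit]
```

With tags applied, a search run on behalf of a user with `internal` clearance would pass its candidate documents through `visible_documents` first, so a document tagged `confidential`, such as the salary data in the earlier example, never reaches the model or the result list.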

Cybersecurity and Model Security

Even after an AI initiative is deployed into production with appropriate data and privacy controls, workflows, and RACIs, it remains exposed to technical vulnerabilities and to threats that originate with end users. End users may inadvertently expose sensitive information or be exploited through social engineering tactics that bypass technical safeguards.

Organisations must proactively sanitize user inputs to block malicious content, encrypt both stored and transmitted data, and enforce strict access controls.
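As a minimal sketch of input sanitization, the function below strips control characters, caps the input length, and flags text that matches a small denylist. The length limit and the patterns are hypothetical, and pattern matching alone will not stop a determined attacker; it is one layer among the controls listed above, not a complete defence.

```python
import re
import unicodedata

MAX_INPUT_CHARS = 2000  # hypothetical limit

# Hypothetical denylist, for illustration only.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"<\s*script\b", re.IGNORECASE),
]


def sanitize_input(text):
    """Return (cleaned_text, flags) for a piece of user input."""
    # Drop control characters, but keep newlines and tabs.
    cleaned = "".join(
        ch for ch in text
        if ch in "\n\t" or unicodedata.category(ch) != "Cc"
    )
    # Truncate overly long input.
    cleaned = cleaned[:MAX_INPUT_CHARS]
    # Record which denylist patterns matched, so the caller can block or log.
    flags = [p.pattern for p in SUSPICIOUS_PATTERNS if p.search(cleaned)]
    return cleaned, flags
```

Returning flags instead of rejecting outright leaves the decision to the caller, for example blocking the request, routing it for review, or simply writing it to the audit trail.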

Equally important is model security: employing secure authentication practices, rigorously validating training data to thwart poisoning attacks, and incorporating adversarial training to enhance model robustness.

If organisations have the capability, a red-teaming exercise that simulates attacks to uncover and remediate vulnerabilities would be advantageous as well.

Iterating and Improving Governance

Once the governance framework has been put into practice, ongoing refinement is vital to staying ahead of evolving risks, technologies, and regulations.

Frequent review cadences with various departments, coupled with regular training and awareness initiatives, help teams adapt policies in response to emerging threats or changes in compliance standards.

This continuous cycle of improvement ensures that the governance framework is effective and resilient, and organisations can achieve responsible, sustainable AI adoption at scale.