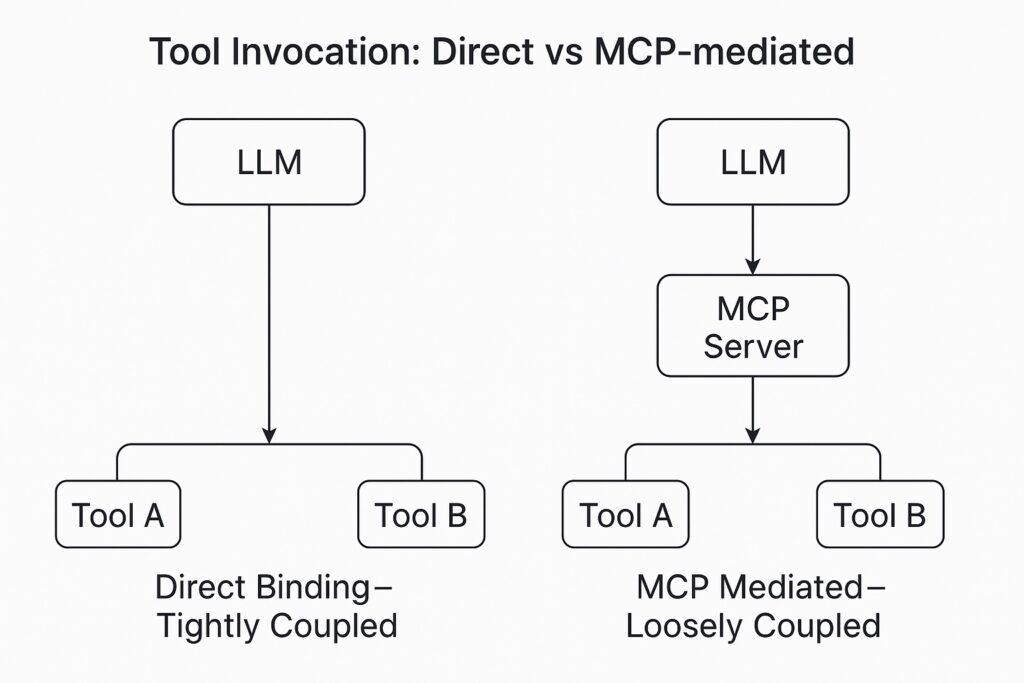

While modern Large Language Models (LLMs) already support tool use (function calling) to reach external resources such as databases and custom business logic, introducing the Model Context Protocol (MCP) adds a crucial architectural benefit.

In traditional setups, tools or functions are often tightly coupled to the invoking services or applications. This creates dependencies that are hard to manage, scale, or replace without affecting the overall architecture.

The MCP server introduces an intermediary abstraction layer between the LLM and the tools. This architectural shift decouples the LLM from the service implementations, allowing for:

- Loosely coupled architecture that aligns with modern software design principles

- Flexibility to include, remove, or update services without impacting the LLM’s core interaction logic

- Ease of service substitution: you can swap one service for another that exposes the same interface or name, without changing the LLM configuration

By adopting MCP, teams can build more scalable, maintainable, and adaptable AI-based systems.
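The decoupling idea can be illustrated with a few lines of Python. This is an analogy rather than MCP itself: the hypothetical `ToolRegistry` plays the role of the MCP layer, so calling code refers to tools only by name, and `dummy_weather` and `other_weather` are made-up stand-ins for two interchangeable services.

```python
from typing import Callable, Dict, List

# A tool takes JSON-like arguments and returns a JSON-like result.
Tool = Callable[[dict], dict]


class ToolRegistry:
    """Hypothetical stand-in for the MCP layer: callers address tools by
    name and never import the implementations directly."""

    def __init__(self) -> None:
        self._tools: Dict[str, Tool] = {}

    def register(self, name: str, tool: Tool) -> None:
        # Registering an existing name swaps the implementation in place.
        self._tools[name] = tool

    def unregister(self, name: str) -> None:
        self._tools.pop(name, None)

    def names(self) -> List[str]:
        return sorted(self._tools)

    def call(self, name: str, arguments: dict) -> dict:
        # The caller only knows the tool's name and argument shape.
        return self._tools[name](arguments)


# Two interchangeable, made-up implementations of the same "weather" tool.
def dummy_weather(args: dict) -> dict:
    return {"location": args["location"], "forecast": "Sunny"}


def other_weather(args: dict) -> dict:
    return {"location": args["location"], "forecast": "Cloudy"}
```

After `registry.register("weather", dummy_weather)`, the call `registry.call("weather", {"location": "Chennai"})` returns the dummy forecast; re-registering `"weather"` with `other_weather` changes the result while the calling code stays exactly the same.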

Let’s illustrate the concept through a simple Dummy Weather Bot:

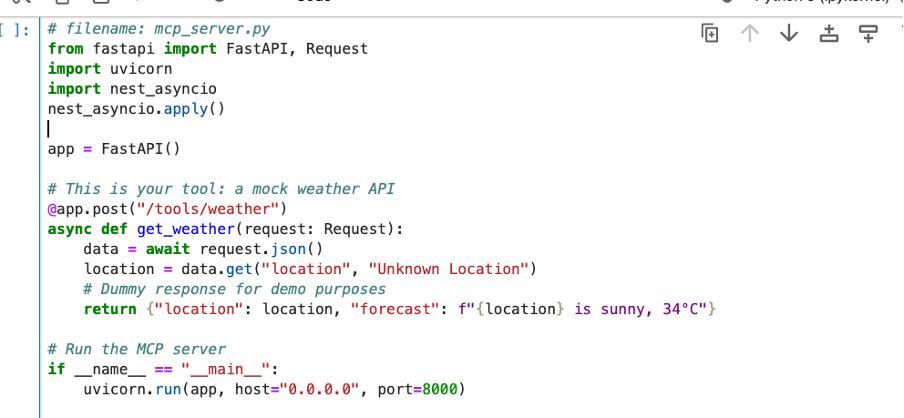

Example of an MCP Server

What does this code do?

- This script sets up a basic MCP server using FastAPI, a lightweight web framework for building APIs.

- It defines a tool endpoint, `/tools/weather`, which acts as a mock weather API and returns a dummy forecast for the location received in the request.

- When GPT or any client sends a POST request with a location (like “Chennai”), the server responds with a fake weather report for that location.

- The server starts on port 8000 and listens for requests; this endpoint is how the client, acting on GPT's behalf, reaches real-world tools via the MCP layer.
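The server described above can be approximated with Python's standard library. This sketch swaps FastAPI for `http.server` so it runs without extra packages; the `/tools/weather` path, the `location` field, and port 8000 come from the description, while the response shape and the names `dummy_forecast`, `WeatherToolHandler`, and `make_server` are assumptions. Like any plain HTTP endpoint, it is not a complete implementation of the MCP specification, which adds JSON-RPC messaging and a capability handshake.

```python
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def dummy_forecast(location: str) -> dict:
    """Return a fake forecast; no real weather data is consulted."""
    return {"location": location, "forecast": f"It is sunny in {location} (dummy data)."}


class WeatherToolHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        if self.path != "/tools/weather":
            self.send_error(404, "Unknown tool")
            return
        try:
            length = int(self.headers.get("Content-Length", 0))
            payload = json.loads(self.rfile.read(length) or b"{}")
            location = payload["location"]
        except (ValueError, KeyError, TypeError):
            # Bad length header, invalid JSON, or missing "location" field.
            self.send_error(400, "Expected a JSON body with a 'location' field")
            return
        body = json.dumps(dummy_forecast(location)).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, fmt, *args) -> None:
        pass  # silence the default per-request console logging


def make_server(port: int = 8000) -> ThreadingHTTPServer:
    """Bind the handler to localhost on the given port (0 = any free port)."""
    return ThreadingHTTPServer(("127.0.0.1", port), WeatherToolHandler)
```

To run it, call `make_server(8000).serve_forever()` and POST `{"location": "Chennai"}` to `http://127.0.0.1:8000/tools/weather`.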

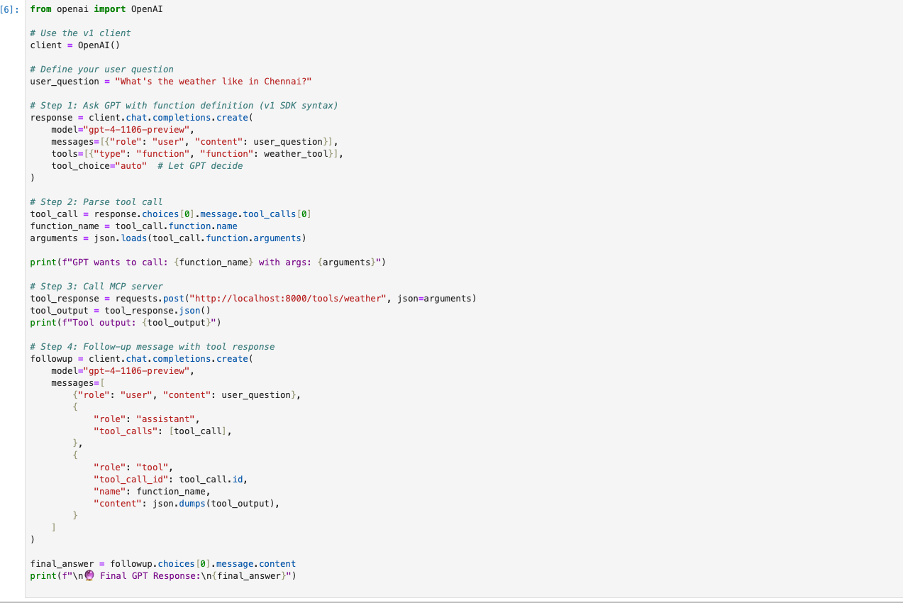

Example of an MCP Client

What does the code do?

- The user asks a question (e.g., about the weather in Chennai), and the GPT model is prompted with this question along with a tool definition (`weather_tool`).

- GPT decides to use the tool and returns the name of the function it wants to call, along with its arguments.

- The code sends this function call to an MCP server (running locally) which processes the request and returns the tool’s actual response (e.g., weather info).

- This response is then sent back to GPT as a follow-up input, helping it to generate a final, informed answer.

- In essence, the code acts as a bridge between GPT, the MCP server, and external tools, making GPT smarter through real-time data.
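The bridging step can be sketched without the OpenAI SDK so it runs offline. The tool schema follows the Chat Completions `tools` format, with the name `weather_tool` taken from the article; the endpoint URL, the `TOOL_ENDPOINTS` mapping, and the helper names are assumptions. The `post` parameter is injectable so the forwarding logic can be exercised without a live server.

```python
import json
import urllib.request
from typing import Callable

# Tool definition in the Chat Completions "tools" format. The name follows
# the article; the description and parameter schema are assumptions.
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "weather_tool",
        "description": "Get a (dummy) weather forecast for a location.",
        "parameters": {
            "type": "object",
            "properties": {"location": {"type": "string"}},
            "required": ["location"],
        },
    },
}

# Hypothetical mapping from tool name to the MCP server endpoint.
TOOL_ENDPOINTS = {"weather_tool": "http://127.0.0.1:8000/tools/weather"}


def http_post_json(url: str, payload: dict) -> dict:
    """POST a JSON payload and decode the JSON response."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.loads(resp.read())


def run_tool_call(tool_call_id: str, name: str, arguments: str,
                  post: Callable[[str, dict], dict] = http_post_json) -> dict:
    """Forward one model tool call to the server and wrap the result as a
    'tool' message to send back to the model."""
    url = TOOL_ENDPOINTS[name]  # KeyError if the model names an unknown tool
    result = post(url, json.loads(arguments))  # arguments arrive as a JSON string
    return {"role": "tool", "tool_call_id": tool_call_id, "content": json.dumps(result)}
```

In a real loop you would pass `tools=[WEATHER_TOOL]` to the chat completion call; if the reply's message contains `tool_calls`, append that assistant message, then one `run_tool_call(call.id, call.function.name, call.function.arguments)` result per call, and call the model again to obtain the final answer.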

Execution result

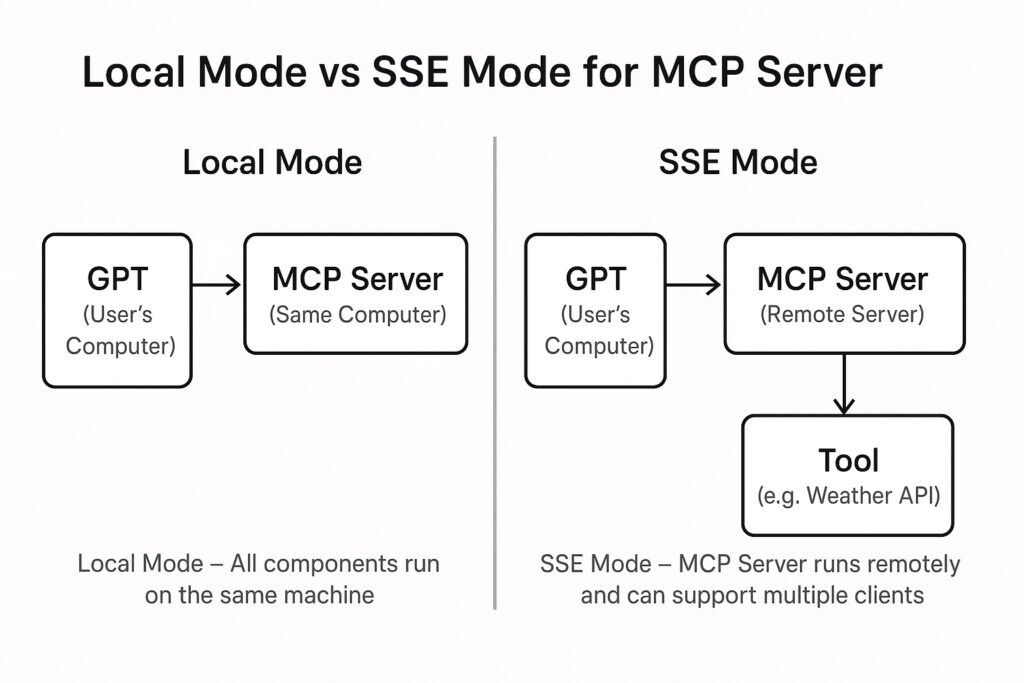

Variations of MCP Servers

- Local Mode (STDIO): The MCP server runs on your own computer, typically launched by the client as a subprocess that communicates over standard input and output. This is useful for testing, development, or small-scale use.

- SSE Mode (Server-Sent Events): The MCP server runs on a remote machine (such as a cloud host or another system) and is reached over HTTP. This is better when many users or systems need to access it, especially in real-time scenarios.
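In local (STDIO) mode, the client and server exchange newline-delimited JSON-RPC 2.0 messages over the server process's standard input and output. The sketch below shows only that framing with one simplified `tools/call` handler; it skips the initialization handshake and the rest of what a real MCP server must implement, and the tool name `weather` is hypothetical. In practice you would call `serve_stdio(sys.stdin, sys.stdout)`.

```python
import json
from typing import TextIO


def handle_request(request: dict) -> dict:
    """Answer one JSON-RPC 2.0 request. Only a simplified 'tools/call'
    for a hypothetical 'weather' tool is supported."""
    reply = {"jsonrpc": "2.0", "id": request["id"]}
    if request.get("method") != "tools/call":
        reply["error"] = {"code": -32601, "message": "Method not found"}
        return reply
    params = request.get("params", {})
    if params.get("name") != "weather":
        reply["error"] = {"code": -32602, "message": "Unknown tool"}
        return reply
    location = params.get("arguments", {}).get("location", "unknown")
    # Tool results carry a list of content items; here, a single text item.
    reply["result"] = {"content": [{"type": "text",
                                    "text": f"Dummy forecast for {location}: sunny."}]}
    return reply


def serve_stdio(instream: TextIO, outstream: TextIO) -> None:
    """Read one JSON message per line; write one JSON response per line."""
    for line in instream:
        line = line.strip()
        if not line:
            continue
        message = json.loads(line)
        if "id" not in message:  # notifications expect no response
            continue
        # json.dumps emits no raw newlines, so each reply stays on one line.
        outstream.write(json.dumps(handle_request(message)) + "\n")
        outstream.flush()  # flush so the client sees each reply immediately
```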

Additional Differences

- Communication Type: SSE keeps a persistent HTTP connection open over which the server streams messages to the client, whereas STDIO exchanges messages through the standard input and output of a local process.

- Data Flow: In SSE, the server can push messages to remote clients as soon as they are available, while with STDIO, messages flow only between the client and the server process it launched on the same machine.

Thus, SSE is better suited to remote, shared, or real-time scenarios, while STDIO is better suited to local, single-client setups such as development and testing.
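On the wire, SSE is plain text over a long-lived HTTP response: each event is a few `field: value` lines ended by a blank line, which is what lets the server keep pushing messages on one open connection. The helper below encodes frames in the Server-Sent Events format; the `message` event name matches what MCP's HTTP+SSE transport uses for JSON-RPC messages, while `stream_updates` and the `weather/update` method are hypothetical. In that transport, the client sends its own messages through separate HTTP POST requests.

```python
import json
from typing import Iterable, Iterator


def format_sse_event(data: str, event: str = "message") -> str:
    """Encode one Server-Sent Events frame: an 'event:' line, one 'data:'
    line per line of payload, and a blank line that ends the event."""
    lines = [f"event: {event}"]
    lines += [f"data: {part}" for part in data.split("\n")]
    return "\n".join(lines) + "\n\n"


def stream_updates(updates: Iterable[dict]) -> Iterator[str]:
    """Yield one SSE frame per update. A web framework would write these to
    a single long-lived response with Content-Type text/event-stream.
    The 'weather/update' method name is hypothetical."""
    for update in updates:
        message = {"jsonrpc": "2.0", "method": "weather/update", "params": update}
        yield format_sse_event(json.dumps(message))
```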

Challenges with MCP Servers

There are many ready-to-use MCP servers available on the internet; a large collection is listed at https://mcp.so. However, it’s crucial to validate any external server before integrating it into your system. Unverified servers can act as Trojan horses, hiding malicious code that could leak sensitive data or compromise system integrity. One safeguard is to use servers marked with a “Verified” badge by trusted platforms or communities; for example, servers verified and listed by reputable GitHub repositories, AI tool registries, or enterprise marketplaces provide an added layer of assurance.

While there’s no official, universal badge standard for MCP servers yet (like the blue check on social media), some platforms and open-source communities are beginning to adopt “Verified” tags or badges. In the meantime, you can vet a server yourself:

- Check the MCP server’s GitHub source code for transparency.

- Look for the maintainer’s identity (e.g., a verified organization or contributor).

- Check community trust signals like stars, forks, issue activity, or references in trusted AI forums.
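Some of these checks can be partly automated. The sketch below reads public metadata from the GitHub REST API repository endpoint (`GET /repos/{owner}/{repo}`) and turns it into a simple checklist. The thresholds `min_stars` and `max_idle_days` are arbitrary assumptions, an organization owner is only a rough proxy for maintainer identity, and passing every check does not prove a server is safe; reading the source remains the essential step.

```python
import json
import urllib.request
from datetime import datetime, timezone
from typing import Optional


def fetch_repo_metadata(owner: str, repo: str) -> dict:
    """Fetch public repository metadata (unauthenticated, so rate-limited)."""
    url = f"https://api.github.com/repos/{owner}/{repo}"
    req = urllib.request.Request(url, headers={"Accept": "application/vnd.github+json"})
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)


def trust_signals(meta: dict, min_stars: int = 50, max_idle_days: int = 180,
                  now: Optional[datetime] = None) -> dict:
    """Turn repository metadata into a pass/fail checklist.
    The thresholds are illustrative assumptions, not an established standard."""
    now = now or datetime.now(timezone.utc)
    # GitHub timestamps look like "2025-01-10T12:00:00Z".
    pushed = datetime.fromisoformat(meta["pushed_at"].replace("Z", "+00:00"))
    return {
        "public_source": not meta.get("private", False),
        "org_owned": meta["owner"]["type"] == "Organization",
        "enough_stars": meta["stargazers_count"] >= min_stars,
        "has_forks": meta["forks_count"] > 0,
        "recently_active": (now - pushed).days <= max_idle_days,
        "not_archived": not meta.get("archived", False),
    }
```

A typical call is `trust_signals(fetch_repo_metadata(owner, repo))`, where `owner` and `repo` identify the server's repository; any `False` entry is a prompt for closer manual review rather than an automatic verdict.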

Difference between MCP and A2A

- Model Context Protocol (MCP) standardizes how an AI application connects a model to external context, such as tools and data sources, enhancing its responses within a specific environment.

- Agent-to-Agent (A2A) Protocol governs communication between autonomous agents, enabling collaboration and data exchange for joint tasks.

In short, MCP is used to improve a model’s contextual awareness within a single system, while A2A is used for interaction and cooperation between multiple independent agents or systems.

Shammy Narayanan is a chief solution architect at Welldoc. A 10x cloud-certified architect, he is deeply passionate about AI, data, and analytics. He can be reached at shammy45@gmail.com.