By James Wilt, Distinguished Architect and Walson Lee, Senior Architect

Involving engineering teams across the value stream in the discovery and adoption of new technologies promotes advancement of new ways of working and accelerates innovation by leveraging discoveries.

What is a Micro-Experiment?

- “Experiment-Driven Development (EDD) is a scientific, fact-based approach to software development using agile principles.” – Understanding Experiment-Driven Development

- “Hypothesis-driven development is based on a series of experiments to validate or disprove a hypothesis in a complex problem domain where we have unknown-unknowns. We want to find viable ideas or fail fast.” – Why hypothesis-driven development is key to DevOps

- “Experiment-driven product development (XDPD) is a way of approaching the product design and development process so that research, discovery, and learning—asking questions and getting useful, reliable answers—are prioritized over designing, and then validating, solutions.” – Experiment-Driven Product Development: How to Use a Data-Informed Approach to Learn, Iterate, and Succeed Faster November 21, 2019, Paul Rissen, Apress

What a Micro-Experiment is not:

- A Proof of Concept (PoC) generally takes one approach to demonstrate feasibility; it lacks the rigor and confidence provided by the many approaches examined in a micro-experiment.

- A Minimum Viable Product (MVP) provides enough features to attract early-adopter customers and validate a product idea, while a micro-experiment’s scope is limited to a single hypothesis/concept under intense evaluation.

Why Micro-Experiment?

- Micro-Experiments allow teams to ask and answer questions in a structured, measurable process. Since ideas are validated by hypotheses, teams avoid the testing of ideas simply to validate individual egos or hunches.

- While agile methodologies dictate that value to the end-user is the primary goal, the hypothesis-driven approach of Micro-Experiments forces teams to define value objectively through validated learning and not assumption alone.

- Efficiency is increased by building an intentional/purposeful Micro-Experiment instead of focusing on cool/superfluous features that provide little benefit to the end-user.

- Teams leading with Micro-Experiments accelerate time to value (by up to 50%). This is because good micro-experiments thoroughly vet failure paths (fail fast), so the chosen paths can be executed with greater confidence.

How to Micro-Experiment:

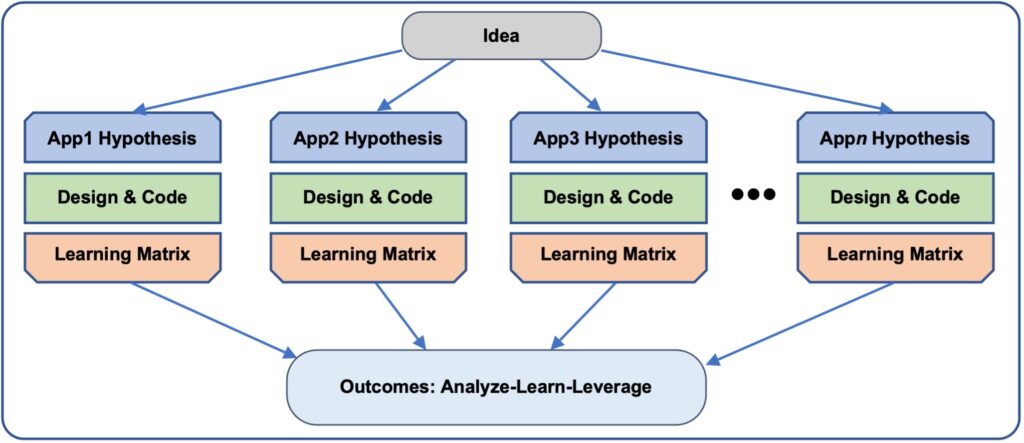

A key differentiator of Micro-Experiments is that they are based on learning through rigorous examination of many approaches. This results in zero to many viable solutions, which can be selected by how well they fit specific criteria.

The first rule of Micro-Experiments is the code lives and dies in the sandbox. The second rule of Micro-Experiments is THE CODE LIVES AND DIES IN THE SANDBOX. Period.

Idea

- Identify the underlying purpose and goals of the Micro-Experiment.

- Clarify what is in and what is out of scope.

- Keep it crisp & concise.

Example: Evaluate the performance, cost, and scaling factors of cloud compute options for web apps.

- In Scope: AWS, Azure, GCP

- Out of Scope: On-premises Kubernetes

Approaches & Hypotheses

- A single approach is just a Proof of Concept, which can easily fall into scope creep. Avoid this. Instead, consider multiple viable and nonviable approaches to deepen learning takeaways.

- For each approach, formulate a hypothesis that defines the smallest intentional/purposeful outcome that will prove or disprove it. Connect the hypothesis to customers, problems, solutions, value, or growth where possible.

- A hypothesis should be simple & direct: “By doing this, we expect that to happen, which benefits by why…”

Example: By using Azure App Services (code deployment), we expect the developer to require little-to-no knowledge around app hosting & operations which will allow us to focus on exceeding customer expectations.
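One way to keep hypotheses consistent across approaches is to record each one in the same three-part shape. The following is a minimal sketch, not a prescribed format: the `Hypothesis` class and its field names are assumptions made for illustration, and the example values are taken from the Azure App Services hypothesis above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    """One hypothesis per approach. Class and field names are illustrative."""
    approach: str  # "By doing this ..."
    expected: str  # "... we expect that to happen ..."
    benefit: str   # "... which benefits by why"

    def statement(self) -> str:
        # Render the three parts as a single sentence.
        return f"By {self.approach}, we expect {self.expected}, which {self.benefit}."


azure_app_services = Hypothesis(
    approach="using Azure App Services (code deployment)",
    expected="the developer to require little-to-no knowledge around app hosting & operations",
    benefit="will allow us to focus on exceeding customer expectations",
)
print(azure_app_services.statement())
```

Writing every hypothesis this way makes it easy to spot one that is missing its expected outcome or its benefit before any code is written.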

Design & Code

- Decide how you should implement each approach: same team for all approaches vs. different team per approach. Weigh the pros & cons against team capability & maturity.

- Leverage patterns & best practices. Platform/product vendors generally have an abundance available to choose from.

- Keep the design & code concise & simple, focused solely on the outcome to be measured.

- A Micro-Experiment need not adhere to the code disciplines and rigor that production code requires (e.g., no 12-Factor App here).

- A half day to a day is a common goal for implementing an approach.

- Design & code each approach and then measure progress based on selected criteria and validated learning (matrix below).

- A Build/Measure/Learn loop is recommended.

Learning Matrix

Capture all the significant and insignificant details of each approach in a matrix. The Learning Matrix is for collecting raw data, not judging (that comes later in Outcomes). Failures are as important as successes, if not more so.

| Attribute | Approach 1 – name | Approach 2 – name | Approach … |
| --- | --- | --- | --- |
| Hypothesis | | | |
| Architecture-Design | | | |
| Spin-up to Dev Time/Difficulty | | | |
| Pipeline & Time to Deploy | | | |
| Security Coverage & Complexity | | | |
| Cost | | | |
| Performance | | | |
| Scale | | | |
| Resiliency & Availability | | | |
| Pain Points | | | |
| When to Use & Avoid | | | |
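Teams that prefer to keep the matrix next to the sandbox code could hold it in a small data structure and render the table from it. This is only a sketch under assumptions: the function names (`new_matrix`, `record`, `to_markdown`) and the approach names in the usage lines are hypothetical, and the recorded cell is placeholder text rather than a real observation.

```python
ATTRIBUTES = [
    "Hypothesis",
    "Architecture-Design",
    "Spin-up to Dev Time/Difficulty",
    "Pipeline & Time to Deploy",
    "Security Coverage & Complexity",
    "Cost",
    "Performance",
    "Scale",
    "Resiliency & Availability",
    "Pain Points",
    "When to Use & Avoid",
]


def new_matrix(approaches):
    """Return {attribute: {approach: ""}} with every cell empty."""
    return {attr: {name: "" for name in approaches} for attr in ATTRIBUTES}


def record(matrix, attribute, approach, observation):
    """Store a raw observation; unknown attributes or approaches raise KeyError."""
    if approach not in matrix[attribute]:
        raise KeyError(approach)
    matrix[attribute][approach] = observation


def to_markdown(matrix):
    """Render the matrix as a pipe table, one row per attribute."""
    approaches = list(next(iter(matrix.values())))
    lines = [
        "| Attribute | " + " | ".join(approaches) + " |",
        "|" + " --- |" * (len(approaches) + 1),
    ]
    for attr, cells in matrix.items():
        lines.append(f"| {attr} | " + " | ".join(cells[a] for a in approaches) + " |")
    return "\n".join(lines)


# Hypothetical approach names and placeholder text, for illustration only.
matrix = new_matrix(["Approach 1", "Approach 2"])
record(matrix, "Pain Points", "Approach 1", "placeholder observation")
print(to_markdown(matrix))
```

Rejecting unknown attributes and approaches keeps typos from silently creating extra rows or columns while data is being collected.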

The attributes of the Learning Matrix can shift over time; however, the following represent the core:

- Hypothesis – provide the hypothesis for the given approach.

- Architecture-Design – capture & display any patterns and artifacts relevant to understanding & communicating the approach.

- Spin-up to Dev Time/Difficulty – capture the effort and time to become productive in the environment & platform used by the approach.

- Pipeline & Time to Deploy – capture/summarize the steps, effort, and time to deploy the approach into an active sandbox/test state.

- Security Coverage & Complexity – when applicable (almost always), capture the approach’s impact (positive and negative) to security practices and any increase or decrease to complexity.

- Cost – capture the cost of the approach at as many levels of scale & utilization as possible. Actual measured costs are best; use “calculators” when actual costs cannot be measured directly. Extrapolate data to estimate costs at production scale. Are there any circumstances where costs will spike? Capture them!

- Performance – leverage built-in platform monitoring and test tools to capture approach performance and duration metrics: avg, min, med, max, percentiles p90 & p95.

- Scale – utilize the “Boeing wing stress test” method (stress it until it breaks) to determine the upper limits of scale-out and scale-up for the approach. Never test scale to a target goal – test well beyond targets until degradations are reached.

- Resiliency & Availability – capture the approach’s robustness by calculating the elemental metrics that determine what SLAs can be achieved.

- Pain Points – capture any process, tool, dependency, limiting, and anecdotal hurdles and roadblocks encountered implementing the approach.

- When to Use & Avoid – clearly & concisely capture and articulate when the approach should be used and when it should be avoided. Start each statement with, “Use when…” or “Avoid when…” incorporating learnings from prior attributes identifying the conditions for this guidance.
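The Performance and Scale attributes above lend themselves to a small amount of tooling. The sketch below uses only the Python standard library; it summarizes a list of request durations into the metrics named under Performance, then ramps load until a degradation threshold is crossed, in the spirit of the “stress it until it breaks” method. The function names, the `degraded_p95_ms` threshold, and the `synthetic_measure` stand-in are all assumptions for illustration; a real experiment would replace `synthetic_measure` with the platform's load-test tooling.

```python
import statistics


def summarize(durations_ms):
    """Return avg, min, med, max, p90, and p95 for a list of durations (ms)."""
    # quantiles(n=100) yields the 99 cut points p1..p99, so index 89 is p90.
    cuts = statistics.quantiles(durations_ms, n=100, method="inclusive")
    return {
        "avg": statistics.fmean(durations_ms),
        "min": min(durations_ms),
        "med": statistics.median(durations_ms),
        "max": max(durations_ms),
        "p90": cuts[89],
        "p95": cuts[94],
    }


def find_breaking_point(measure, loads, degraded_p95_ms):
    """Ramp through ascending loads; return (last_ok_load, first_degraded_load).

    measure(load) is supplied by the caller and returns the durations (ms)
    observed at that load. first_degraded_load is None when nothing degraded,
    which means the ramp did not go far enough.
    """
    last_ok = None
    for load in loads:
        if summarize(measure(load))["p95"] > degraded_p95_ms:
            return last_ok, load
        last_ok = load
    return last_ok, None


def synthetic_measure(load):
    # Synthetic stand-in for a real load test, NOT measured data:
    # every duration simply grows linearly with load.
    return [0.5 * load + i for i in range(1, 102)]


last_ok, first_degraded = find_breaking_point(
    synthetic_measure, loads=[50, 100, 200, 400, 800], degraded_p95_ms=200
)
print(last_ok, first_degraded)
```

Returning `None` for the degraded load when the ramp never crosses the threshold is deliberate: it signals that the test stopped at a target rather than going beyond it, which is exactly what the Scale attribute warns against.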

Outcomes

Overall, Micro-Experiments should effectively Analyze, Learn, & Leverage. What were the observations? How might these learnings be leveraged? Consider the following when compiling Micro-Experiment Outcomes:

- Proving a Hypothesis is a Win!

- Disproving a Hypothesis is equally a Win! Knowing what fails and how to make it fail is often among the greatest lessons learned.

- By learning what fails, teams can proceed with high confidence when selecting approaches to pursue.

- Leveraging “When to Use/Avoid” guidance preserves necessary variability, giving Dev teams multiple choices as surrounding conditions, criteria, and requirements evolve over time. A team that starts with one approach often moves to another once performance and/or cost thresholds are reached.

Time Commitment & Value Return?

Teams that leverage Micro-Experiments & EDD typically dedicate 1-2 weeks to execute their approaches and analyze their outcomes. The following represents the results experienced:

- Teams that apply these learnings see time-to-production reduced by up to 50%. It simply takes far fewer sprints.

- Teams realize fewer “gotchas” as this immersive and exhaustive learning includes becoming an expert in failure paths as well as successful paths.

- Teams move forward with confidence as they’ve learned what breaks, when it breaks, how it breaks, and why it breaks.

- Teams can quickly pivot to different approaches as real-world factors and conditions change over time.

- Hands-on Micro-Experiments land learnings much deeper and teach teams to rely on empirical data & experience from the scientific method over textbook comparisons.

What are you waiting for? Go, Micro-Experiment and be the true Computer Scientist you are meant to be!