By Bala Kalavala, Chief Architect & Technology Evangelist

The Mixture-of-Experts (MoE) architecture is a groundbreaking innovation in deep learning with significant implications for developing and deploying Large Language Models (LLMs). In essence, MoE mimics the human approach to problem-solving by dividing complex tasks among a team of specialized experts. Instead of relying on a single monolithic model, MoE uses a collection of smaller "expert" networks, each of which learns during training to specialize in a particular subset of the data or a particular domain.

Unlike traditional monolithic models, MoE architectures consist of multiple specialized sub-models (experts) that work together to make predictions. Each expert handles a subset of the input data, and a gating mechanism determines which experts should be activated for a given input. This approach allows MoE models to achieve high performance while maintaining computational efficiency.
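To make the gating idea concrete, here is a minimal sketch of one MoE layer applied to a single input vector. It assumes a linear softmax gate and top-k selection with the selected gate values renormalized to sum to 1, which is one common variant. Real systems route batches of tokens to learned feed-forward experts; the function `moe_forward`, the `gate_weights` matrix, and the toy scaling "experts" are hypothetical illustrations.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, gate_weights, experts, k=2):
    """Route vector x to its top-k experts and mix their outputs.

    gate_weights: one row per expert; expert i's gate logit is row i dotted with x.
    experts: callables that map a vector to a vector of the same length.
    Returns (output vector, indices of the experts that were used).
    """
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    probs = softmax(logits)
    # Pick the k most probable experts; every other expert is skipped entirely.
    top_k = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:k]
    # Renormalize the selected gate values so they sum to 1.
    norm = sum(probs[i] for i in top_k)
    out = [0.0] * len(x)
    for i in top_k:
        y = experts[i](x)  # only the selected experts do any work
        weight = probs[i] / norm
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out, top_k


# Usage: four toy "experts" that scale their input by 1, 2, 3, and 4.
experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
gate_weights = [[0.0, 0.0], [1.0, 0.0], [2.0, 0.0], [3.0, 0.0]]
output, used = moe_forward([1.0, 0.0], gate_weights, experts, k=2)
print(used, output)  # experts 3 and 2 are selected
```

Only two of the four experts are evaluated, yet the gate still decides how much each selected expert contributes to the result.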

Imagine a team of expert consultants, each specializing in a particular domain. When faced with a complex question, it is often more practical to consult the relevant experts than a single generalist. MoE applies this principle. In the context of LLMs, the "experts" are smaller, specialized sub-networks (typically feed-forward layers inside the model) that learn to handle particular kinds of input. Conceptually, one expert might excel at generating creative text while another specializes in translation. A crucial element is the "gating network," which acts as a traffic director, determining which experts should be activated for a given input. For example, if the input is a poem, the gating network might activate the expert specializing in creative text.

Because the gating network routes each input to the most suitable expert(s), this dynamic selection both reduces computational overhead and lets each expert focus on its area of expertise. MoE models are therefore inherently sparse: only a subset of experts is activated for each input, so the model does not need to compute the outputs of all experts for every input, which yields significant computational savings. In the same vein, MoE architectures are highly scalable; adding experts expands model capacity (total parameters) without a proportional increase in per-input computation, although all experts must still be stored in memory. This makes MoE models particularly suitable for large-scale tasks.
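The scaling argument can be made concrete with a little parameter arithmetic. The sketch below assumes each expert is a two-layer feed-forward block (biases ignored) and that the router is a single linear layer; the function names and the layer dimensions in the example are hypothetical and not taken from any specific model.

```python
def ffn_params(d_model, d_ff):
    """Weights of one two-layer feed-forward expert: d_model->d_ff and d_ff->d_model (biases ignored)."""
    return 2 * d_model * d_ff


def moe_layer_params(d_model, d_ff, num_experts, k):
    """Return (total, active_per_token) parameter counts for one MoE layer.

    Every token uses the router (a d_model x num_experts matrix) plus k experts.
    """
    router = d_model * num_experts
    total = num_experts * ffn_params(d_model, d_ff) + router
    active = k * ffn_params(d_model, d_ff) + router
    return total, active


# Hypothetical layer sizes; only the number of experts changes between the two runs.
for num_experts in (8, 64):
    total, active = moe_layer_params(d_model=1024, d_ff=4096, num_experts=num_experts, k=2)
    print(f"{num_experts} experts: {total:,} total params, {active:,} active per token")
```

Going from 8 to 64 experts raises this layer's total parameters from about 67 million to about 537 million, while the parameters each token actually touches stay at roughly 16.8 million. Memory grows with the total; per-token compute grows only with k.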

The application of MoE to open-source LLMs offers several key advantages. Firstly, it enables the creation of more powerful and sophisticated models without incurring the prohibitive costs associated with training and deploying massive, single-model architectures. Secondly, MoE facilitates the development of more specialized and efficient LLMs, tailored to specific tasks and domains. This specialization can lead to significant improvements in performance, accuracy, and efficiency across a wide range of applications, from natural language translation and code generation to personalized education and healthcare.

The open-source nature of MoE-based LLMs promotes collaboration and innovation within the AI community. By making these models accessible to researchers, developers, and businesses, open-source MoE releases foster a vibrant ecosystem of experimentation, customization, and shared learning. This collaborative approach accelerates development, driving advancements in LLM technology and democratizing access to cutting-edge AI capabilities.

| Benefits of MoE Architecture | Drawbacks of MoE Architecture |
|---|---|
| MoE models are computationally efficient because they activate only a subset of experts for each input. This reduces the overall computational burden compared to traditional dense models, which process every input through the entire network. | MoE models are more complex to design and train than traditional models. The gating mechanism and the need to balance expert specialization add further layers of complexity. |
| A significant advantage of MoE models is their ability to scale model capacity by adding more experts without a proportional increase in computational cost. This makes them well-suited for large-scale applications. | MoE models can be challenging to train due to the dynamic nature of the gating mechanism. Ensuring that all experts are trained effectively and that the gating network makes good routing decisions can be difficult. |
| Expert specialization allows MoE models to handle diverse and complex data more effectively. Each expert can focus on a specific type of input, leading to better overall performance. | Expert specialization can lead to overfitting, especially if the gating mechanism does not generalize well to unseen data. Regularization techniques are often required to mitigate this risk. |
| MoE architectures are flexible and can be adapted to various tasks, including natural language processing, computer vision, and reinforcement learning. Their modular nature allows for easy customization and extension. | While MoE models are computationally efficient during inference, they can require significant resources during training, particularly with large numbers of experts, and all experts must still be held in memory at inference time. |

Table: Pros and Cons of MoE Architecture
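One widely used way to address the training drawback in the table, where some experts receive too few tokens to learn well, is an auxiliary load-balancing loss added to the training objective. This sketch follows the formulation described in the Switch Transformer paper, α · N · Σᵢ fᵢ · Pᵢ, where N is the number of experts, fᵢ is the fraction of tokens dispatched to expert i, and Pᵢ is the mean router probability for expert i. The function name and the default α are illustrative choices.

```python
def load_balancing_loss(router_probs, assignments, num_experts, alpha=0.01):
    """Switch-Transformer-style auxiliary loss: alpha * N * sum_i f_i * P_i.

    router_probs: one list of router probabilities per token (each list sums to 1).
    assignments: index of the expert each token was dispatched to (top-1 routing).
    """
    num_tokens = len(assignments)
    # f_i: fraction of tokens actually dispatched to expert i.
    f = [assignments.count(i) / num_tokens for i in range(num_experts)]
    # P_i: average probability the router assigned to expert i.
    p = [sum(probs[i] for probs in router_probs) / num_tokens for i in range(num_experts)]
    return alpha * num_experts * sum(fi * pi for fi, pi in zip(f, p))


balanced = load_balancing_loss([[0.5, 0.5]] * 4, [0, 1, 0, 1], num_experts=2, alpha=1.0)
collapsed = load_balancing_loss([[1.0, 0.0]] * 4, [0, 0, 0, 0], num_experts=2, alpha=1.0)
print(balanced, collapsed)  # 1.0 2.0
```

The loss equals α when routing is perfectly uniform and grows as tokens concentrate on a few experts, so minimizing it nudges the gating network toward spreading work across all experts.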

Top Open-Source Frameworks That Leverage MoE Architecture

The Switch Transformer, a groundbreaking model from Google, exemplifies the power of MoE by replacing the dense feed-forward layers of the Transformer with sparse MoE layers in which each token is routed to a single expert. This simplification allows the model to scale to over a trillion parameters while keeping per-token computation roughly constant. The Switch Transformer has demonstrated strong performance on a range of natural language processing tasks. However, its training complexity and significant resource requirements can present challenges for deployment.
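Switch-style top-1 routing with a fixed expert capacity can be sketched as follows. The sketch assumes capacity = ⌈tokens / experts × capacity_factor⌉ and first-come priority within the batch. A token that arrives after its expert is full is dropped from the MoE layer; in the Switch Transformer such a token simply continues through the residual connection. `switch_route` is a hypothetical helper, not Google's implementation.

```python
import math


def switch_route(router_probs, num_experts, capacity_factor=1.0):
    """Top-1 (Switch) routing with a fixed per-expert capacity.

    router_probs: one list of router probabilities per token.
    Returns (routes, capacity); routes[t] is (expert, gate_value), or None if
    token t was dropped because its expert was already full.
    """
    num_tokens = len(router_probs)
    capacity = math.ceil(num_tokens / num_experts * capacity_factor)
    load = [0] * num_experts
    routes = []
    for probs in router_probs:  # assumption: earlier tokens get priority
        expert = max(range(num_experts), key=lambda i: probs[i])
        if load[expert] < capacity:
            load[expert] += 1
            # The expert's output for this token is later scaled by this gate value.
            routes.append((expert, probs[expert]))
        else:
            # Dropped: the token skips the MoE layer and continues via the residual path.
            routes.append(None)
    return routes, capacity


probs = [[0.9, 0.1], [0.8, 0.2], [0.7, 0.3], [0.4, 0.6]]
routes, capacity = switch_route(probs, num_experts=2)
print(capacity, routes)  # 2 [(0, 0.9), (0, 0.8), None, (1, 0.6)]
```

Raising `capacity_factor` drops fewer tokens at the cost of more padded, wasted expert slots, which is the trade-off behind the resource concerns mentioned above.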

GShard is another Google-developed MoE system, designed specifically for large-scale distributed training. By combining MoE layers with automatic sharding, GShard enables efficient scaling across numerous devices, making it suitable for training models with thousands of experts. While highly scalable and flexible across tasks, GShard's distributed training setup adds complexity and demands substantial computational resources.
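GShard uses top-2 gating: each token is sent to its two highest-scoring experts, the two gate values g1 and g2 are normalized by g1 + g2, and each expert accepts at most a fixed number of tokens per group. The sketch below loosely models that idea under stated assumptions: first choices fill expert buffers before any second choices, and a token whose second choice overflows keeps only its first-choice weight. GShard's random dispatch of the second expert is omitted, and `top2_dispatch` and its default capacity factor are hypothetical.

```python
import math


def top2_dispatch(router_probs, num_experts, capacity_factor=2.0):
    """Top-2 gating for one group of tokens with a per-expert capacity.

    router_probs: one list of router probabilities per token (num_experts >= 2).
    Returns, per token, a list of (expert, combine_weight) pairs that fit in capacity.
    """
    num_tokens = len(router_probs)
    capacity = math.ceil(capacity_factor * num_tokens / num_experts)
    top2 = [sorted(range(num_experts), key=lambda i: p[i], reverse=True)[:2] for p in router_probs]
    load = [0] * num_experts
    routes = [[] for _ in range(num_tokens)]
    for choice in (0, 1):  # pass 0 places every first choice, pass 1 every second choice
        for t, pair in enumerate(top2):
            expert = pair[choice]
            if load[expert] < capacity:
                load[expert] += 1
                p = router_probs[t]
                # Combine weight: this expert's gate value normalized by g1 + g2.
                routes[t].append((expert, p[expert] / (p[pair[0]] + p[pair[1]])))
    return routes


routes = top2_dispatch([[0.7, 0.3], [0.6, 0.4], [0.2, 0.8]], num_experts=2, capacity_factor=1.0)
print(routes)  # token 1 loses its second choice, token 2 loses its second choice
```

Giving first choices priority means that when an expert overflows, it is the lower-weighted second assignments that get dropped.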

DeepSpeed-MoE, an open-source implementation from Microsoft, is part of the DeepSpeed library, renowned for its focus on efficient large-scale model training. This framework incorporates optimizations for both training and inference, ensuring high efficiency while scaling to large numbers of experts. DeepSpeed-MoE seamlessly integrates with other DeepSpeed optimizations, further enhancing its performance. However, the steep learning curve of the DeepSpeed library and the resource-intensive nature of training can pose challenges for some users.
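A key idea behind scaling to many experts is expert parallelism: the experts are partitioned across devices, and each device exchanges tokens with the others in an all-to-all step so that every token reaches the device holding its expert. In DeepSpeed itself this is configured when an expert module is wrapped with `deepspeed.moe.layer.MoE`, whose `ep_size` argument sets the expert-parallel degree. The pure-Python sketch below does not use DeepSpeed; it only counts how many tokens each rank would send to each other rank, assuming experts are split into equal contiguous blocks per rank. Both function names are hypothetical.

```python
def expert_to_rank(expert, num_experts, ep_size):
    """Assumption: experts are split into ep_size equal, contiguous blocks."""
    experts_per_rank = num_experts // ep_size
    return expert // experts_per_rank


def all_to_all_counts(assignments_per_rank, num_experts, ep_size):
    """Count tokens each source rank must send to each destination rank.

    assignments_per_rank[r] lists the expert chosen for each token on rank r.
    Returns an ep_size x ep_size matrix where counts[src][dst] is a token count.
    """
    if num_experts % ep_size != 0:
        raise ValueError("num_experts must be divisible by ep_size")
    counts = [[0] * ep_size for _ in range(ep_size)]
    for src, assignments in enumerate(assignments_per_rank):
        for expert in assignments:
            counts[src][expert_to_rank(expert, num_experts, ep_size)] += 1
    return counts


# Four experts on two ranks: experts 0-1 live on rank 0, experts 2-3 on rank 1.
counts = all_to_all_counts([[0, 3, 2, 1], [3, 3, 0]], num_experts=4, ep_size=2)
print(counts)  # [[2, 2], [1, 2]]
```

Off-diagonal entries are tokens that must cross the network, which is why communication cost, not just arithmetic, dominates large expert-parallel deployments.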

FastMoE, an open-source system from researchers at Tsinghua University, prioritizes ease of use and seamless integration with popular deep learning libraries like PyTorch. Its user-friendly API and performance optimizations make it a valuable tool for researchers and developers. While highly flexible and adaptable, FastMoE may have scalability limitations compared to other MoE frameworks when dealing with very large models. Furthermore, as a relatively new framework, FastMoE may have less community support than more established libraries.
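Much of the engineering in MoE systems goes into the data movement inside an MoE layer: tokens are scattered into one batch per expert, each expert runs once on its whole batch, and the results are gathered back into the original token order. Systems such as FastMoE accelerate this step with custom GPU code; the pure-Python sketch below only illustrates the scatter/gather idea, and all of its function names are hypothetical.

```python
def scatter_by_expert(tokens, assignments, num_experts):
    """Group tokens into one batch per expert, remembering their original positions."""
    batches = [[] for _ in range(num_experts)]
    positions = [[] for _ in range(num_experts)]
    for pos, (token, expert) in enumerate(zip(tokens, assignments)):
        batches[expert].append(token)
        positions[expert].append(pos)
    return batches, positions


def gather_outputs(expert_outputs, positions, num_tokens):
    """Write each expert's outputs back to the original token positions."""
    out = [None] * num_tokens
    for outputs, idxs in zip(expert_outputs, positions):
        for value, pos in zip(outputs, idxs):
            out[pos] = value
    return out


def run_experts(tokens, assignments, experts):
    batches, positions = scatter_by_expert(tokens, assignments, len(experts))
    # Each expert runs once on its whole batch instead of once per token.
    expert_outputs = [expert(batch) for expert, batch in zip(experts, batches)]
    return gather_outputs(expert_outputs, positions, len(tokens))


experts = [lambda batch: [x * 10 for x in batch], lambda batch: [x + 1 for x in batch]]
print(run_experts([1, 2, 3, 4], [1, 0, 0, 1], experts))  # [2, 20, 30, 5]
```

Batching per expert is what lets each expert run as one dense matrix operation on a GPU rather than as many small per-token calls.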

OpenMoE, developed by ColossalAI, aims to simplify the development of MoE models. It addresses the challenges posed by the growing size of deep learning models, particularly the memory limits of a single GPU. OpenMoE offers a unified interface that supports pipeline, data, and tensor parallelism to scale model training across distributed systems, and it incorporates the Zero Redundancy Optimizer (ZeRO) to improve memory efficiency.
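The memory benefit of ZeRO can be estimated with simple arithmetic. Following the accounting in the ZeRO paper, mixed-precision Adam training stores 2 bytes per parameter for fp16 weights, 2 bytes for fp16 gradients, and K = 12 bytes for fp32 optimizer states; ZeRO stages 1, 2, and 3 progressively partition optimizer states, gradients, and parameters across the data-parallel GPUs. The sketch counts model states only (activations are ignored), and the function name and example model size are hypothetical.

```python
def zero_bytes_per_gpu(num_params, dp_degree, stage, k=12):
    """Model-state memory per GPU (bytes) for mixed-precision Adam under ZeRO.

    fp16 parameters and gradients take 2 bytes each per parameter; the fp32
    master weights, momentum, and variance together take k = 12 bytes per parameter.
    """
    params, grads, optim = 2 * num_params, 2 * num_params, k * num_params
    if stage >= 1:
        optim /= dp_degree   # stage 1: partition optimizer states
    if stage >= 2:
        grads /= dp_degree   # stage 2: also partition gradients
    if stage >= 3:
        params /= dp_degree  # stage 3: also partition parameters
    return params + grads + optim


# Hypothetical 7.5-billion-parameter model trained on 64 data-parallel GPUs.
for stage in range(4):
    gb = zero_bytes_per_gpu(7_500_000_000, dp_degree=64, stage=stage) / 1e9
    print(f"ZeRO stage {stage}: {gb:.1f} GB per GPU")
```

For this example, per-GPU model-state memory falls from about 120 GB without ZeRO to roughly 31.4 GB, 16.6 GB, and 1.9 GB at stages 1, 2, and 3.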

DeepSeek, a relatively new entrant in the MoE framework landscape, prioritizes efficient training and inference of large-scale MoE models. A key focus of DeepSeek is cost-effectiveness, aiming to minimize computational costs and resource consumption. This emphasis on resource optimization includes techniques like efficient memory management and distributed training strategies, making it suitable for training and deploying large-scale AI models in resource-constrained environments.
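The DeepSeek-V2 technical report describes a DeepSeekMoE layer that combines a few always-active shared experts with fine-grained routed experts chosen by top-k gating. Its output is h = u + Σ shared_i(u) + Σ g_i · routed_i(u), where s_i = softmax(u · e_i), and g_i = s_i for the top-k routed experts and 0 otherwise. The sketch below mirrors that formula for a single token vector; the function and argument names and the toy experts are hypothetical, and real experts are learned feed-forward networks.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def deepseek_moe_layer(u, shared_experts, routed_experts, centroids, k):
    """h = u + sum(shared(u)) + sum over the top-k routed experts of s_i * routed_i(u)."""
    scores = softmax([sum(a * b for a, b in zip(u, e)) for e in centroids])
    top_k = sorted(range(len(routed_experts)), key=lambda i: scores[i], reverse=True)[:k]
    h = list(u)  # residual connection
    for expert in shared_experts:  # shared experts process every token
        h = [a + b for a, b in zip(h, expert(u))]
    for i in top_k:  # gates are the softmax scores themselves, not renormalized
        h = [a + scores[i] * b for a, b in zip(h, routed_experts[i](u))]
    return h


u = [1.0, 0.0]
shared = [lambda v: [2 * x for x in v]]
routed = [lambda v, s=s: [s * x for x in v] for s in (1.0, 2.0, 3.0)]
centroids = [[0.0, 0.0], [0.0, 0.0], [5.0, 0.0]]
h = deepseek_moe_layer(u, shared, routed, centroids, k=1)
print(h)
```

Shared experts capture knowledge every token needs, which frees the routed experts to specialize; this is one of the levers behind the cost-effectiveness the report emphasizes.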

As this overview shows, several open-source frameworks leverage the Mixture-of-Experts (MoE) architecture, each with unique strengths. DeepSpeed-MoE, from Microsoft, excels in efficiency and scalability and integrates seamlessly with other DeepSpeed optimizations. FastMoE, developed at Tsinghua University, prioritizes ease of use and integrates with popular deep learning frameworks like PyTorch, making it accessible to a wider range of developers.

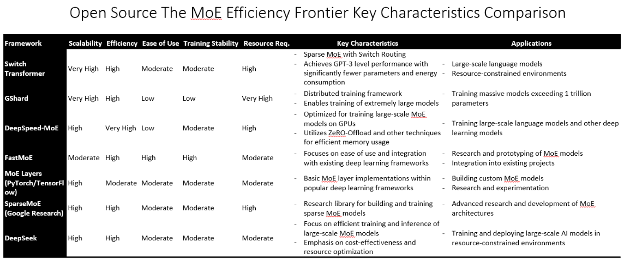

Figure: Comparison of the Benefits and Drawbacks of Open-Source MoE Frameworks

DeepSeek, the newest of these, is geared toward cost-effectiveness, minimizing computational cost and resource consumption so that large-scale models can be trained and deployed in resource-constrained environments. While it is still evolving, DeepSeek's focus on efficiency could significantly improve the accessibility and scalability of advanced AI models.

Conclusion

Mixture of Experts (MoE) architecture offers a powerful and efficient approach to scaling model capacity, making it well-suited for large-scale machine learning tasks. While MoE models come with their own challenges, such as training complexity and resource requirements, the benefits of efficiency, scalability, and specialization make them an attractive option for many applications.

Integrating MoE architecture into open-source LLMs represents a significant step forward in the evolution of artificial intelligence. By combining the power of specialization with the benefits of open-source collaboration, MoE unlocks new possibilities for creating more efficient, powerful, and accessible AI models that can revolutionize various aspects of our lives.

The open-source offerings discussed in this article (Switch Transformer, GShard, DeepSpeed-MoE, FastMoE, OpenMoE, and DeepSeek) each have strengths and weaknesses. The right choice depends on the specific requirements of the task at hand, including the scale of the model, the available computational resources, and the desired ease of use.

As research in MoE architectures continues to advance, we can expect further improvements in training stability, resource efficiency, and overall performance, making MoE models an increasingly viable option for many machine-learning applications.

References:

- OpenMoE – An Early Effort on Open Mixture-of-Experts Language Models: https://arxiv.org/html/2402.01739v1

- DeepSpeed – Mixture of Experts: https://www.deepspeed.ai/tutorials/mixture-of-experts/

- DeepSeek-V2 – MoE Architecture: https://arxiv.org/html/2405.04434v2

About the Author

Bala Kalavala is a seasoned technologist enthusiastic about pragmatic business solutions influencing thought leadership. He is a sought-after keynote speaker, evangelist, and tech blogger. He is also a digital transformation business development executive at a global technology consulting firm, an Angel investor, and a serial entrepreneur with an impressive track record.

This article expresses the author's own views and not those of his organization.